WWDC: What’s new for App Clips in ARKit 5

One of Apple’s quietly significant WWDC 2021 announcements is its planned enhancement to the App Clip Codes feature in ARKit 5, which becomes a powerful tool for almost any B2B or B2C business that sells products.

Some things just seem to climb off the page

When App Clip Codes were introduced last year, the focus was on offering access to tools and services found within apps. Every App Clip Code carries a scannable visual pattern and may also include an NFC tag. People scan the code with the camera, or tap the NFC tag, to launch the App Clip.

This year Apple has enhanced AR support in App Clips and App Clip Codes. ARKit can now recognize and track App Clip Codes within AR experiences, so you can run part of an AR experience without the entire app.
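For developers, switching this on might look something like the Swift below. It is a minimal sketch. It assumes an ARKit release that exposes `ARWorldTrackingConfiguration.supportsAppClipCodeTracking` and `appClipCodeTrackingEnabled`. The helper names `makeAppClipCodeConfiguration` and `startAppClipCodeSession` are hypothetical and are not part of ARKit.

```swift
import ARKit

/// Hypothetical helper: builds a world-tracking configuration with App Clip Code
/// tracking switched on, or returns nil on devices that can't support it
/// (Apple says this needs an A12 Bionic chip or later).
func makeAppClipCodeConfiguration() -> ARWorldTrackingConfiguration? {
    guard ARWorldTrackingConfiguration.supportsAppClipCodeTracking else {
        return nil
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.appClipCodeTrackingEnabled = true
    return configuration
}

/// Hypothetical helper: runs the session if App Clip Code tracking is available.
/// Returns false so the caller can fall back to a non-AR experience.
func startAppClipCodeSession(on session: ARSession) -> Bool {
    guard let configuration = makeAppClipCodeConfiguration() else {
        return false
    }
    session.run(configuration)
    return true
}
```

Returning an optional configuration lets the App Clip degrade gracefully on older hardware instead of failing when the session starts.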

In customer experience terms, this means a company can create an augmented reality experience that becomes available when a customer points their camera at an App Clip Code. That code could be in a product reference manual, on a poster, in the pages of a magazine or at a trade show booth: wherever you need customers to find this asset.

Apple offered two primary real-world scenarios in which it imagines using these codes:

- A tile company might use them so a customer can preview different tile designs on the wall.

- A seed catalog could show an AR image of what a fully grown plant or vegetable will look like, and could let you see virtual versions of that greenery growing in your garden, via AR.

Both implementations seemed fairly static, but it’s possible to imagine more ambitious uses. App Clip Codes could be used to explain how to assemble self-assembly furniture, to illustrate car maintenance manuals, or to provide virtual instructions for a coffeemaker.

What’s an App Clip?

An App Clip is a small slice of an app that takes people through part of an app without requiring them to install the whole app. App Clips save download time and take people directly to the specific part of the app that’s relevant to where they are at that moment.

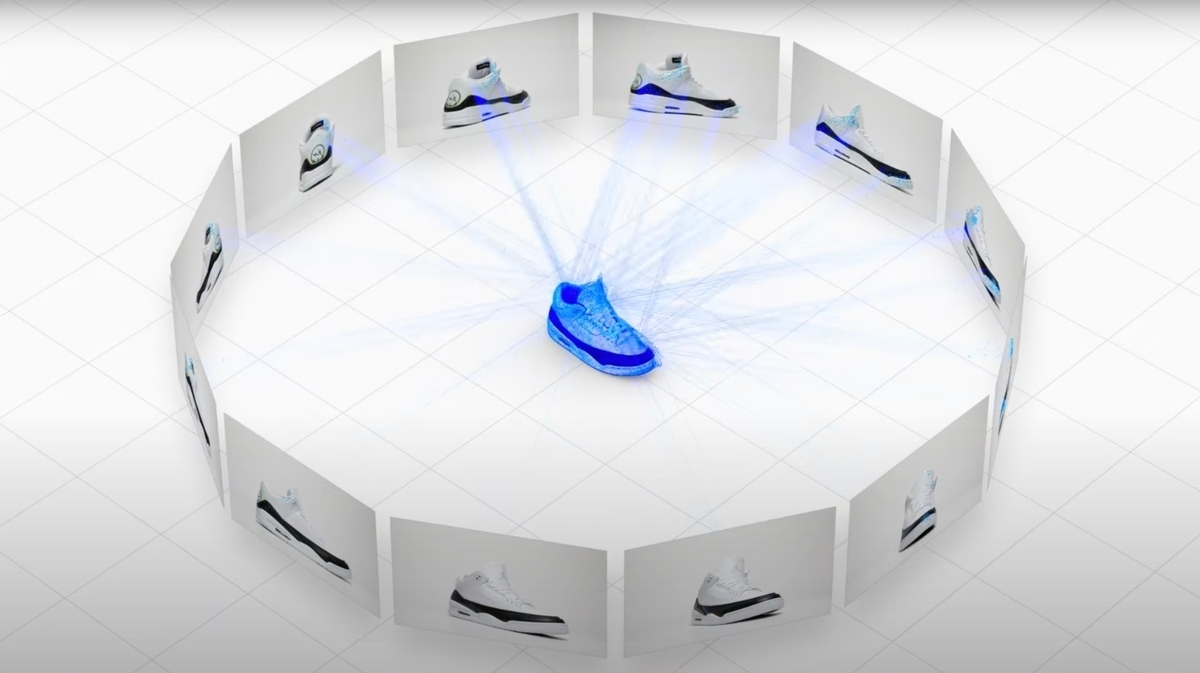

Object Capture

Apple also introduced an important supporting tool at WWDC 2021: Object Capture in RealityKit 2. It makes it easier for developers to quickly create photo-realistic 3D models of real-world objects using images captured on an iPhone, iPad or DSLR.

What this essentially means is that Apple has moved beyond letting developers build AR experiences that exist only within apps. It now supports AR experiences that work portably, more or less outside of apps.

That’s significant because it helps create an ecosystem of AR assets, services and experiences. Apple will need that ecosystem as it pushes further into this space.

Faster processors required

It is important to understand which devices are capable of running such content. When ARKit was first introduced alongside iOS 11, Apple said it required at least an A9 processor. Things have moved on since then, and the most sophisticated features in ARKit 5 require at least an A12 Bionic chip.

In this case, App Clip Code tracking requires a device with an A12 Bionic processor or later, such as the iPhone XS. It is noteworthy that these experiences require one of Apple’s newer processors, as the company inexorably drives toward the launch of AR glasses.

It also helps explain Apple’s strategic decision to invest in chip development. After all, the move from A10 Fusion to A11 processors yielded a 25% performance gain. Apple now seems to be achieving roughly similar gains with each iteration of its chips. We should see another leap in performance per watt once it moves to 3nm chips in 2022. Thanks to the M-series Mac chips, these advances in capability are now available across its platforms.

Despite all this power, Apple warns that decoding these codes can take time. It therefore suggests developers display a placeholder visualization while the magic happens.
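Here is one way to follow that advice, sketched under stated assumptions. In ARKit, each detected code is reported as an `ARAppClipCodeAnchor`, and its `urlDecodingState` changes from `.decoding` to either `.decoded` or `.failed`. The `placeholderText(for:url:)` helper, the status strings and the `onStatusChange` callback are all hypothetical. A real app would probably drive a RealityKit or SceneKit placeholder entity rather than a text label.

```swift
import ARKit

/// Hypothetical helper: the status text to show for an App Clip Code
/// while ARKit works out which URL it encodes.
func placeholderText(for state: ARAppClipCodeAnchor.URLDecodingState, url: URL?) -> String {
    switch state {
    case .decoding:
        return "Scanning App Clip Code..."
    case .failed:
        return "Couldn't read this code. Move closer and try again."
    case .decoded:
        return url?.absoluteString ?? "Decoded"
    @unknown default:
        return "Scanning App Clip Code..."
    }
}

/// Session delegate that reports a placeholder status for every App Clip Code
/// anchor ARKit adds or updates.
final class AppClipCodeObserver: NSObject, ARSessionDelegate {
    /// Hypothetical UI hook: anchor identifier plus the text to display.
    var onStatusChange: ((UUID, String) -> Void)?

    func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
        report(anchors)
    }

    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        report(anchors)
    }

    private func report(_ anchors: [ARAnchor]) {
        // Ignore every anchor type except App Clip Code anchors.
        for case let codeAnchor as ARAppClipCodeAnchor in anchors {
            let text = placeholderText(for: codeAnchor.urlDecodingState, url: codeAnchor.url)
            onStatusChange?(codeAnchor.identifier, text)
        }
    }
}
```

Both `didAdd` and `didUpdate` are handled because a code is usually still decoding when its anchor first appears.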

What else is new in ARKit 5?

In addition to App Clip Codes, ARKit 5 benefits from:

Location Anchors

It’s now possible to place AR content at specific geographic locations, tying the experience to a latitude/longitude position in Maps. This feature also requires an A12 processor or later and is available in key U.S. cities and in London.
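Here is a rough sketch of what this looks like in code. The Swift below first checks that geotracking is supported on the device and available at the user’s current location. It then adds an `ARGeoAnchor` at a given coordinate. The function name `placeLocationAnchor` and its completion callback are hypothetical. `ARGeoTrackingConfiguration.checkAvailability` and `ARGeoAnchor(coordinate:)` are ARKit’s own location-anchor calls, but a shipping app would need more setup, such as handling location permission and geotracking status.

```swift
import ARKit
import CoreLocation
import Foundation

/// Hypothetical helper: places a location anchor at `coordinate` if geotracking
/// is supported on this device and available where the user currently is.
/// Calls `completion` with true once the anchor has been added.
func placeLocationAnchor(in session: ARSession,
                         at coordinate: CLLocationCoordinate2D,
                         completion: @escaping (Bool) -> Void) {
    // Unsupported hardware (pre-A12, or no GPS): fail immediately.
    guard ARGeoTrackingConfiguration.isSupported else {
        completion(false)
        return
    }
    // Availability depends on whether Apple covers the user's current city.
    ARGeoTrackingConfiguration.checkAvailability { available, error in
        DispatchQueue.main.async {
            guard available, error == nil else {
                completion(false)
                return
            }
            session.run(ARGeoTrackingConfiguration())
            session.add(anchor: ARGeoAnchor(coordinate: coordinate))
            completion(true)
        }
    }
}
```

The availability check runs asynchronously, so the result is delivered back on the main queue before the session is touched.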

This means you might be able to wander around and get AR experiences simply by pointing your camera at a sign, or by checking a location in Maps. This kind of overlaid reality has to be a hint at the company’s plans, particularly alongside its improvements in accessibility, person recognition and walking directions.

Motion capture improvements

ARKit 5 can now track body joints more accurately at longer distances. On A12 or later processors, motion capture also more accurately supports a wider range of limb movements and body poses. No code changes are required, so any app that already uses motion capture this way should benefit from better accuracy once iOS 15 ships.
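To illustrate what “no code changes” means, here is a minimal sketch of ordinary body-tracking code built on `ARBodyTrackingConfiguration` and `ARBodyAnchor`. The `BodyTracker` class and its `onHeadPosition` callback are hypothetical. According to Apple, code of this shape would simply receive more accurate joint data on A12 or later devices under iOS 15.

```swift
import ARKit

/// Hypothetical wrapper around an ARKit body-tracking session.
final class BodyTracker: NSObject, ARSessionDelegate {
    let session = ARSession()
    /// Hypothetical callback receiving the head joint's position in world space.
    var onHeadPosition: ((SIMD3<Float>) -> Void)?

    /// Starts body tracking; returns false on devices that don't support it.
    func start() -> Bool {
        guard ARBodyTrackingConfiguration.isSupported else { return false }
        session.delegate = self
        session.run(ARBodyTrackingConfiguration())
        return true
    }

    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let body as ARBodyAnchor in anchors {
            // Model transforms are relative to the body anchor (the hip root joint).
            guard let headInBody = body.skeleton.modelTransform(for: .head) else { continue }
            // Convert to world space by applying the anchor's own transform.
            let world = body.transform * headInBody
            onHeadPosition?(SIMD3(world.columns.3.x, world.columns.3.y, world.columns.3.z))
        }
    }
}
```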


Please follow me on Twitter, or join me in the AppleHolic’s bar & grill and Apple Discussions groups on MeWe.