Tools to Help You Deliver A Machine Learning Platform And Address Skill Gaps

As we learned from the previous two blog posts in this series, public clouds have set the pace and the standards for satisfying data scientists’ technology needs, but on-premises offerings are becoming viable thanks to innovations such as Kubernetes and Kubeflow.

…but it still can be hard!

With expectations set very high by the public cloud, delivering ML platforms on-premises has become even more difficult for IT teams. The automation flows, and the tooling that powers them, are well hidden behind public cloud customer consoles, so the process for replicating them is far from obvious.

Even though abstraction technologies such as Kubernetes relate well to the underlying infrastructure, bridging current data center skills over to cloud native tools takes enthusiasm and persistence in the face of potential frustration as these technology ‘stacks’ are learned and mastered.

With this in mind, the Cisco community has developed an open source tool named “MLAnywhere” to help build the skills needed for cloud native ML platforms. MLAnywhere provides a real, usable Kubeflow workflow (pipeline) with sample ML applications, deployed on top of Kubernetes through a clean and intuitive interface. As well as addressing the educational aspects for IT teams, it automates and significantly speeds up the deployment of a Kubeflow environment, including many of the essential aspects that usually go unseen.

How MLAnywhere works

MLAnywhere is a simple microservice, built using container technologies and designed to be easily installed, maintained and evolved. The fundamental goal of this open source project is to help IT teams understand what it takes to configure these environments, whilst giving data scientists a usable platform that includes real-world examples of ML code, built into the tool as Jupyter Notebook samples.

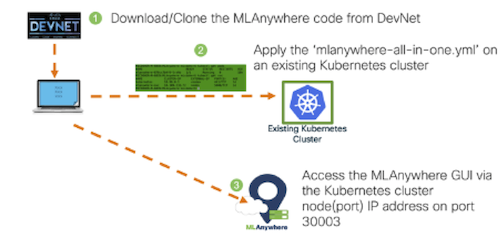

The installation process is straightforward: download the project files from the Cisco DevNet repository, follow the instructions to build a container using a Dockerfile, and launch the resulting container on an existing Kubernetes cluster.
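To give a feel for the final step, launching a container on an existing Kubernetes cluster, here is a minimal sketch in Python that builds a standard Kubernetes Deployment manifest. It is not taken from the MLAnywhere repository: the function name `build_deployment`, the image name `registry.example.com/mlanywhere:latest` and the port `5000` are all hypothetical placeholders, and the project's own installation instructions remain the reference.

```python
import json


def build_deployment(name: str, image: str, port: int, replicas: int = 1) -> dict:
    """Return a minimal Kubernetes Deployment manifest as a plain dict."""
    # The selector must match the pod template labels, so reuse one dict.
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image name and port, used purely for illustration.
    manifest = build_deployment(
        "mlanywhere", "registry.example.com/mlanywhere:latest", 5000
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest could be saved to a file and passed to `kubectl apply -f`.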

MLAnywhere layers on top of technologies such as the Cisco Container Platform, a Kubernetes cluster management solution. Cisco Container Platform greatly simplifies both day-1 deployment and day-2 operations of Kubernetes, and does so in a secure, production-grade and fully supported fashion.

Importantly for ML workloads, Cisco Container Platform also eases the burden of having to align GPU drivers and software. MLAnywhere uses the APIs provided by Cisco Container Platform to consume the underlying GPU resources when the supporting Kubernetes clusters are deployed, and exposes those resources to the Kubeflow tools.
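Once GPUs are exposed to a cluster, a workload typically asks for them through a resource limit in its pod spec. The sketch below shows that general Kubernetes pattern in Python; it is not MLAnywhere's or Cisco Container Platform's code. It assumes the GPUs are advertised under the `nvidia.com/gpu` resource name, as the NVIDIA device plugin does, and the helper name `gpu_pod` and the choice of image are illustrative only.

```python
import json


def gpu_pod(name: str, image: str, gpus: int = 1) -> dict:
    """Return a minimal Pod manifest that requests whole GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be a positive integer")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Assumption: GPUs are advertised as "nvidia.com/gpu".
                    # GPUs are requested in whole units under "limits".
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Illustrative image choice; any GPU-enabled image would do.
    pod = gpu_pod("gpu-smoke-test", "tensorflow/tensorflow:latest-gpu")
    print(json.dumps(pod, indent=2))
```

The scheduler will only place such a pod on a node that has a free GPU, which is why having the drivers and device plumbing aligned in advance matters.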

So what’s in it for IT Operations teams?

For IT teams, the MLAnywhere interface presents clear, descriptive steps for deploying the relevant elements. It also surfaces the all-important logging information, which helps educate the user on what is going on under the surface and what it takes within the underlying Kubernetes platform to prepare, deploy and run the Kubeflow tooling.

Not forgetting the Data Scientists

On the data scientists’ side, many will have experience with traditional methodologies in the ML space and will therefore see the benefits that container technology brings in areas such as dependencies, environment variable management and GPU driver deployment. Importantly, they get these benefits whilst leveraging the scale and speed of Kubernetes, comfortably abstracted away from the infrastructure, and while still using well-known frameworks such as TensorFlow and PyTorch.

Because ML engineers and data scientists are generally more concerned with getting access to the actual dashboards and tools than with the underlying plumbing, MLAnywhere provides links to the Kubeflow interface as the environments are dynamically built out on demand.

What does the future hold?

Hopefully you can see that MLAnywhere can bring value quickly to the various teams involved in the ML process, with a focus on the educational aspects that help data scientists and IT operations teams make the transition to cloud native methodologies.

Moving forward, we will continue to add further nuggets of value to MLAnywhere. An important point to note is that we intend to merge this project with another Cisco initiative around Kubeflow called “The Cisco Kubeflow Starter Pack”. The two approaches are complementary and, when combined, will bring their best aspects together into a compelling open source project.

Finally, we will leave you with a practical note. A well-used phrase in the ML world is “it takes many months to deliver an ML platform into the hands of data scientists”. MLAnywhere can do this in less than 30 minutes!

For more information and to download: The MLAnywhere code repository and installation instructions

Catch up with part 1 and part 2 in this series.

The post Tools to Help You Deliver A Machine Learning Platform And Address Skill Gaps appeared first on Cisco Blogs.
