Strategies for achieving least privilege at scale – Part 2

In this post, we continue with our recommendations for achieving least privilege at scale with AWS Identity and Access Management (IAM). In Part 1 of this two-part series, we described the first five of nine strategies for implementing least privilege in IAM at scale, and we looked at a few mental models that can help you scale your approach. Here in Part 2, we cover the remaining four strategies and the related mental models for scaling least privilege across your organization.

6. Empower developers to author application policies

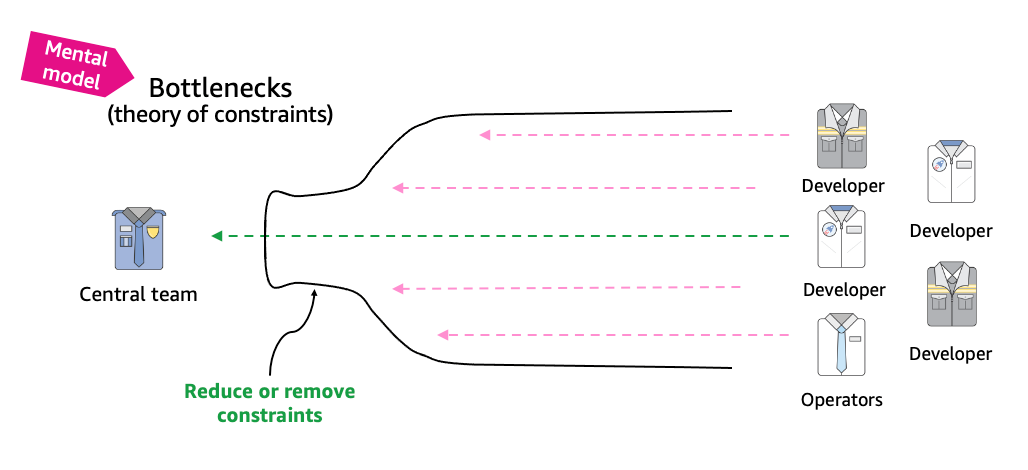

If you’re the only developer working in your cloud environment, then you naturally write your own IAM policies. However, a common trend we’ve seen within organizations that are scaling up their cloud usage is that a centralized security, identity, or cloud team administrator will step in to write customized IAM policies on behalf of the development teams. This may be due to a variety of reasons, including unfamiliarity with the policy language or a fear of creating potential security risk by granting excess privileges. Centralized creation of IAM policies might work well for a while, but as the team or business grows, this practice often becomes a bottleneck, as indicated in Figure 1.

Figure 1: Bottleneck in a centralized policy authoring process

This mental model is known as the theory of constraints. With this model in mind, you should be keen to search for constraints, or bottlenecks, faced by your team or organization, identify the root cause, and solve for the constraint. That might sound obvious, but when you’re moving at a fast pace, the constraint might not appear until agility is already impaired. As your organization grows, a process that worked years ago might no longer be effective today.

A software developer generally understands the intent of the applications they build, and to some extent the permissions required. At the same time, the centralized cloud, identity, or security teams tend to feel they are the experts at safely authoring policies, but lack a deep knowledge of the application’s code. The goal here is to enable developers to write the policies in order to mitigate bottlenecks.

The question is, how do you equip developers with the right tools and skills to confidently and safely create the required policies for their applications? A simple way to start is by investing in training. AWS offers a variety of formal training options and ramp-up guides that can help your team gain a deeper understanding of AWS services, including IAM. However, even self-hosting a small hackathon or workshop session in your organization can drive improved outcomes. Consider the following four workshops as simple options for self-hosting a learning series with your teams.

- How and when to use different IAM policy types workshop – Learn when to use which policy type, and who should own and manage the policy.

- IAM policy learning experience workshop – Learn how to write different types of IAM policies and implement access controls on principals and resources, using conditions to scope down access.

- IAM troubleshooting workshop – Learn how to create fine-grained access policies with the help of the IAM API, AWS Management Console, IAM Access Analyzer, and AWS CloudTrail, and review key concepts of the IAM policy evaluation logic.

- Refining IAM Permissions Like A Pro – Learn how to use IAM Access Analyzer programmatically, use tools to check IAM policies in CI/CD pipeline and AWS Lambda functions, and get hands-on practice in using the tools from the perspectives of both Security and DevOps teams.

As a next step, you can help your teams along the way by setting up processes that foster collaboration and improve quality. For example, peer reviews are highly recommended, and we’ll cover this later. Additionally, administrators can use AWS native tools such as permissions boundaries and IAM Access Analyzer policy generation to help your developers begin to author their own policies more safely.

Let’s look at permissions boundaries first. An IAM permissions boundary is generally used to delegate the responsibility of policy creation to your development team. You can set up the developer’s IAM role so that they can create new roles only if the new role has a specific permissions boundary attached to it; that permissions boundary allows you (as an administrator) to set the maximum permissions that can be granted by the developer. This restriction is implemented by a condition on the developer’s identity-based policy, requiring that specific actions (such as iam:CreateRole or iam:AttachRolePolicy) are allowed only if a specified permissions boundary is attached.

In this way, when a developer creates an IAM role or policy to grant an application some set of required permissions, they are required to add the specified permissions boundary that will “bound” the maximum permissions available to that application. Even if the policy that the developer creates (for example, for their AWS Lambda function) is not sufficiently fine-grained, the permissions boundary helps the organization’s cloud administrators make sure that the Lambda function’s effective permissions do not exceed a predefined maximum. With permissions boundaries, your development team can create new roles and policies (within constraints) without administrators becoming a manual bottleneck.
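As a rough illustration of the condition described above, the following Python sketch builds a developer identity-based policy that allows role creation and policy attachment only when a specific permissions boundary is present. The account ID, the `/app/` role path, and the boundary policy name are hypothetical placeholders; `iam:PermissionsBoundary` is the IAM condition key used for this purpose.

```python
import json

# Hypothetical values for illustration only.
ACCOUNT_ID = "111122223333"
BOUNDARY_ARN = f"arn:aws:iam::{ACCOUNT_ID}:policy/app-permissions-boundary"


def developer_role_creation_policy(boundary_arn: str, account_id: str) -> dict:
    """Allow role creation and policy changes only when the boundary is attached."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "CreateOrChangeRolesOnlyWithBoundary",
                "Effect": "Allow",
                "Action": [
                    "iam:CreateRole",
                    "iam:AttachRolePolicy",
                    "iam:PutRolePolicy",
                    "iam:PutRolePermissionsBoundary",
                ],
                # Assumed naming convention: application roles live under /app/.
                "Resource": f"arn:aws:iam::{account_id}:role/app/*",
                "Condition": {
                    "StringEquals": {"iam:PermissionsBoundary": boundary_arn}
                },
            },
            {
                # Developers should not be able to strip the boundary afterwards.
                "Sid": "NeverRemoveTheBoundary",
                "Effect": "Deny",
                "Action": "iam:DeleteRolePermissionsBoundary",
                "Resource": "*",
            },
        ],
    }


policy = developer_role_creation_policy(BOUNDARY_ARN, ACCOUNT_ID)
print(json.dumps(policy, indent=2))
```

This is a starting point rather than a complete delegation setup. In practice you would likely also prevent developers from editing the boundary policy itself, and you would adapt the role path to your own naming convention.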

Another tool developers can use is IAM Access Analyzer policy generation. IAM Access Analyzer reviews your CloudTrail logs and autogenerates an IAM policy based on your access activity over a specified time range. This greatly simplifies the process of writing granular IAM policies that allow end users access to AWS services.

A classic use case for IAM Access Analyzer policy generation is to generate an IAM policy within the test environment. This provides a good starting point to help identify the needed permissions and refine your policy for the production environment. For example, IAM Access Analyzer can’t identify the production resources used, so it adds resource placeholders for you to modify and add the specific Amazon Resource Names (ARNs) your application team needs. However, not every policy needs to be customized, and the next strategy will focus on reusing some policies.
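To make the placeholder step concrete, here is a small Python sketch that fills resource placeholders in a generated policy and reports any that remain. It assumes the placeholders use a `${Name}` syntax; the sample policy, the bucket name, and both helper functions are hypothetical and written for this example.

```python
import copy
import re

# Assumed placeholder syntax: ${Name}, as in "arn:aws:s3:::${BucketName}/*".
PLACEHOLDER = re.compile(r"\$\{(\w+)\}")


def fill_resource_placeholders(policy: dict, values: dict) -> dict:
    """Return a copy of the policy with known resource placeholders replaced."""
    filled = copy.deepcopy(policy)
    for statement in filled["Statement"]:
        if "Resource" not in statement:
            continue
        resources = statement["Resource"]
        as_list = [resources] if isinstance(resources, str) else resources
        # Unknown placeholder names are left untouched so they stay visible.
        statement["Resource"] = [
            PLACEHOLDER.sub(lambda m: values.get(m.group(1), m.group(0)), r)
            for r in as_list
        ]
    return filled


def unresolved_placeholders(policy: dict) -> set:
    """Collect placeholder names still present, so nothing ships half-filled."""
    names = set()
    for statement in policy["Statement"]:
        resources = statement.get("Resource", [])
        for r in [resources] if isinstance(resources, str) else resources:
            names.update(PLACEHOLDER.findall(r))
    return names


# Hand-written stand-in for a policy generated in a test environment.
generated = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject"],
            "Resource": "arn:aws:s3:::${BucketName}/${ObjectPath}",
        }
    ],
}

production = fill_resource_placeholders(generated, {"BucketName": "example-prod-bucket"})
print(production["Statement"][0]["Resource"])
print(unresolved_placeholders(production))
```

Checking for unresolved placeholders before deployment is a design choice in this sketch: it turns a forgotten substitution into an explicit failure rather than a policy that silently matches nothing.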

7. Maintain well-written policies

Strategies seven and eight focus on processes. The first process we’ll focus on is to maintain well-written policies. To begin, not every policy needs to be a work of art. There is some wisdom in reusing well-written policies across your accounts, because that can be an effective way to scale permissions management. There are three steps to approach this task:

- Identify your use cases

- Create policy templates

- Maintain repositories of policy templates

For example, if you were new to AWS and using a new account, we would recommend that you use AWS managed policies as a reference to get started. However, the permissions in these policies might not fit how you intend to use the cloud as time progresses. Eventually, you would want to identify the repetitive or common use cases in your own accounts and create common policies or templates for those situations.

When creating templates, you must understand who or what the template is for. One thing to note here is that the developer’s needs tend to be different from the application’s needs. When a developer is working with resources in your accounts, they often need to create or delete resources—for example, creating and deleting Amazon Simple Storage Service (Amazon S3) buckets for the application to use.

Conversely, a software application generally needs to read or write data; in this example, it needs to read and write objects in the S3 bucket that was created by the developer. Notice that the developer’s permissions needs (creating the bucket) are different from the application’s needs (reading and writing objects in the bucket). Because these are different access patterns, you’ll need to create different policy templates tailored to the different use cases and entities.
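The difference between the two access patterns can be sketched as two separate templates. The following Python example is illustrative only: the function names, the bucket naming convention, and the exact action lists are assumptions, not a recommended baseline.

```python
def developer_bucket_policy(bucket_prefix: str) -> dict:
    """Template for the person or pipeline that provisions buckets."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:CreateBucket", "s3:DeleteBucket", "s3:ListBucket"],
                # Assumed convention: a team's buckets share a name prefix.
                "Resource": f"arn:aws:s3:::{bucket_prefix}-*",
            }
        ],
    }


def application_bucket_policy(bucket_name: str) -> dict:
    """Template for the running application, which only handles objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
        ],
    }


def allowed_actions(policy: dict) -> set:
    """Flatten the Allow statements of a policy into a set of action names."""
    actions = set()
    for statement in policy["Statement"]:
        if statement["Effect"] == "Allow":
            actions.update(statement["Action"])
    return actions


dev = allowed_actions(developer_bucket_policy("orders"))
app = allowed_actions(application_bucket_policy("orders-data"))
print("shared:", sorted(dev & app))
print("developer only:", sorted(dev - app))
print("application only:", sorted(app - dev))
```

Comparing the two action sets shows a small overlap and a larger set of actions that belong to only one of the two entities, which is why a single shared template would over-grant to both.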

Figure 2 highlights this issue further. Out of the set of all possible AWS services and API actions, there is a set of permissions that is relevant for your developers (or more likely, their DevOps build and delivery tools) and a set of permissions that is relevant for the software applications that they are building. Those two sets may have some overlap, but they are not identical.

Figure 2: Visualizing intersecting sets of permissions by use case

When discussing policy reuse, you’re likely already thinking about common policies in your accounts, such as default federation permissions for team members or automation that runs routine security audits across multiple accounts in your organization. Many of these policies could be considered default policies that are common across your accounts and generally do not vary. Likewise, permissions boundary policies (which we discussed earlier) can have commonality across accounts with low amounts of variation. There’s value in reusing both of these sets of policies. However, reusing policies too broadly could cause challenges if variation is needed—to make a change to a “reusable policy,” you would have to modify every instance of that policy, even if it’s only needed by one application.

You might find that you have relatively common resource policies that multiple teams need (such as an S3 bucket policy), but with slight variations. This is where you might find it useful to create a repeatable template that abides by your organization’s security policies, and make it available for your teams to copy. We call it a template here, because the teams might need to change a few elements, such as the Principals that they authorize to access the resource. The policies for the applications (such as the policy a developer creates to attach to an Amazon Elastic Compute Cloud (Amazon EC2) instance role) are generally more bespoke or customized and might not be appropriate in a template.
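A resource policy template of this kind might look like the following Python sketch, where the only element a team changes is the list of principals. The function name, the read-only access pattern, and the TLS-only rule standing in for an organizational security requirement are all assumptions made for illustration.

```python
def bucket_policy_from_template(bucket_name: str, principal_arns: list) -> dict:
    """Build an S3 bucket policy where only the principals vary per team."""
    if not principal_arns:
        raise ValueError("at least one principal ARN is required")
    if any(arn == "*" for arn in principal_arns):
        raise ValueError("wildcard principals are not allowed by this template")

    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # The part each team customizes.
                "Sid": "AllowReadForNamedPrincipals",
                "Effect": "Allow",
                "Principal": {"AWS": sorted(principal_arns)},
                "Action": "s3:GetObject",
                "Resource": f"{bucket_arn}/*",
            },
            {
                # The fixed part: a hypothetical organization-wide rule.
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
        ],
    }


team_policy = bucket_policy_from_template(
    "example-shared-reports",
    ["arn:aws:iam::111122223333:role/app/report-reader"],
)
print(team_policy["Statement"][0]["Principal"])
```

Rejecting a wildcard principal inside the template function is one way to keep the customizable part from undermining the fixed part; your own template might enforce different rules.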

Figure 3 illustrates that some policies have low amounts of variation while others are more bespoke.

Figure 3: Identifying bespoke versus common policy types

Regardless of whether you choose to reuse a policy or turn it into a template, an important step is to store these reusable policies and templates securely in a repository (for example, AWS CodeCommit). Many customers use infrastructure-as-code modules to make it simple for development teams to input their customizations and generate IAM policies that fit their security policies in a programmatic way. Some customers document these policies and templates directly in the repository, while others use internal wikis accompanied by other relevant information. You’ll need to decide which process works best for your organization. Whatever mechanism you choose, make it accessible and searchable by your teams.

8. Peer review and validate policies

We mentioned in Part 1 that least privilege is a journey and that having a feedback loop is a critical part of it. You can implement feedback through human review, or you can automate the review and validate the findings. This is as important for the core default policies as it is for the customized, bespoke policies.

Let’s start with some automated tools you can use. We recommend IAM Access Analyzer policy validation and custom policy checks. Policy validation helps you set secure and functional policies while you’re authoring them. The feature is available through APIs and the AWS Management Console. IAM Access Analyzer validates your policy against IAM policy grammar and AWS best practices. You can view policy validation check findings that include security warnings, errors, general warnings, and suggestions for your policy.

Let’s review some of the finding categories.

| Finding type | Description |
| --- | --- |
| Security | Includes warnings if your policy allows access that AWS considers a security risk because the access is overly permissive. |
| Errors | Includes errors if your policy includes lines that prevent the policy from functioning. |
| Warning | Includes warnings if your policy doesn’t conform to best practices, but the issues are not security risks. |
| Suggestions | Includes suggestions if AWS recommends improvements that don’t impact the permissions of the policy. |
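The categories above can be handled programmatically. The following Python sketch groups findings by category, assuming each finding is a dictionary with `findingType` and `findingDetails` keys and that the API reports the types as `SECURITY_WARNING`, `ERROR`, `WARNING`, and `SUGGESTION`. The mapping to the table's names and the sample data are hand-written for illustration, not real service output.

```python
from collections import defaultdict

# Assumed mapping from API findingType values to the categories in the table.
CATEGORY_BY_FINDING_TYPE = {
    "SECURITY_WARNING": "Security",
    "ERROR": "Errors",
    "WARNING": "Warning",
    "SUGGESTION": "Suggestions",
}


def group_by_category(findings: list) -> dict:
    """Group finding details under the category names used in the table."""
    grouped = defaultdict(list)
    for finding in findings:
        category = CATEGORY_BY_FINDING_TYPE.get(finding["findingType"], "Unknown")
        grouped[category].append(finding["findingDetails"])
    return dict(grouped)


# Hand-written sample data, not real API output.
sample_findings = [
    {"findingType": "SECURITY_WARNING", "findingDetails": "Example: overly permissive statement."},
    {"findingType": "SUGGESTION", "findingDetails": "Example: redundant action."},
    {"findingType": "SUGGESTION", "findingDetails": "Example: empty array."},
]

grouped = group_by_category(sample_findings)
for category, details in grouped.items():
    print(f"{category}: {len(details)} finding(s)")
```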

Custom policy checks are a new IAM Access Analyzer capability that helps security teams accurately and proactively identify critical permissions in their policies. You can use this to check against a reference policy (that is, determine if an updated policy grants new access compared to an existing version of the policy) or check against a list of IAM actions (that is, verify that specific IAM actions are not allowed by your policy). Custom policy checks use automated reasoning, a form of static analysis, to provide a higher level of security assurance in the cloud.
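Both kinds of check can be wrapped in small helper functions. The sketch below assumes a client object that exposes `check_no_new_access` and `check_access_not_granted` with the parameter names used by the boto3 `accessanalyzer` client, and that the response carries a `result` of `PASS` or `FAIL`. The `StubAnalyzer` class is a hypothetical offline stand-in that compares action names literally; it does not perform automated reasoning and exists only so that the example runs without AWS credentials.

```python
import json


def grants_new_access(client, updated: dict, existing: dict) -> bool:
    """True if the updated policy allows access the existing one did not."""
    response = client.check_no_new_access(
        newPolicyDocument=json.dumps(updated),
        existingPolicyDocument=json.dumps(existing),
        policyType="IDENTITY_POLICY",
    )
    return response["result"] == "FAIL"


def grants_forbidden_access(client, policy: dict, forbidden_actions: list) -> bool:
    """True if the policy allows any of the listed actions."""
    response = client.check_access_not_granted(
        policyDocument=json.dumps(policy),
        access=[{"actions": forbidden_actions}],
        policyType="IDENTITY_POLICY",
    )
    return response["result"] == "FAIL"


def _allowed_actions(document: str) -> set:
    """Stub helper: collect literal action names from Allow statements."""
    actions = set()
    for statement in json.loads(document)["Statement"]:
        if statement["Effect"] == "Allow":
            value = statement["Action"]
            actions.update([value] if isinstance(value, str) else value)
    return actions


class StubAnalyzer:
    """Offline stand-in; a naive comparison, not the real service logic."""

    def check_no_new_access(self, newPolicyDocument, existingPolicyDocument, policyType):
        added = _allowed_actions(newPolicyDocument) - _allowed_actions(existingPolicyDocument)
        return {"result": "FAIL" if added else "PASS"}

    def check_access_not_granted(self, policyDocument, access, policyType):
        forbidden = {a for entry in access for a in entry["actions"]}
        hit = _allowed_actions(policyDocument) & forbidden
        return {"result": "FAIL" if hit else "PASS"}


existing = {"Version": "2012-10-17", "Statement": [
    {"Effect": "Allow", "Action": ["s3:GetObject"], "Resource": "*"}]}
updated = {"Version": "2012-10-17", "Statement": [
    {"Effect": "Allow", "Action": ["s3:GetObject", "iam:PassRole"], "Resource": "*"}]}

client = StubAnalyzer()
print(grants_new_access(client, updated, existing))
print(grants_forbidden_access(client, updated, ["iam:PassRole"]))
```

To run against the real service, you would pass `boto3.client("accessanalyzer")` in place of the stub. Taking the client as a parameter keeps the helpers testable offline.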

One technique that can help you with both peer reviews and automation is the use of infrastructure-as-code. By this, we mean you can write and deploy your IAM policies as AWS CloudFormation templates (CFTs) or AWS Cloud Development Kit (AWS CDK) applications. You can use a software version control system with your templates so that you know exactly what changes were made, and then test and deploy your default policies across multiple accounts, such as by using AWS CloudFormation StackSets.
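As a minimal sketch of the infrastructure-as-code idea, the following Python function wraps a policy document in a CloudFormation template that declares an `AWS::IAM::ManagedPolicy` resource. The function name, logical ID, and sample policy are hypothetical; the resulting JSON is what you would commit to version control and review.

```python
import json


def managed_policy_template(logical_id: str, description: str, policy_document: dict) -> dict:
    """Wrap an IAM policy document in a CloudFormation template."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": description,
        "Resources": {
            logical_id: {
                "Type": "AWS::IAM::ManagedPolicy",
                "Properties": {
                    "Description": description,
                    "PolicyDocument": policy_document,
                },
            }
        },
    }


# Hypothetical default policy shared across accounts.
read_only_audit = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["iam:GetRole", "iam:ListRoles"], "Resource": "*"}
    ],
}

template = managed_policy_template(
    "SecurityAuditReadOnly", "Read-only access for routine audits", read_only_audit
)
# Stable key order keeps diffs in version control small and reviewable.
print(json.dumps(template, indent=2, sort_keys=True))
```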

In Figure 4, you’ll see a typical development workflow. This is a simplified version of a CI/CD pipeline with three stages: a commit stage, a validation stage, and a deploy stage. In the diagram, the developer’s code (including IAM policies) is checked across multiple steps.

Figure 4: A pipeline with a policy validation step

In the commit stage, if your developers are authoring policies, you can quickly incorporate peer reviews at the time they commit to the source code, and this creates some accountability within a team to author least privilege policies. Additionally, you can use automation by introducing IAM Access Analyzer policy validation in a validation stage, so that the work can only proceed if there are no security findings detected. To learn more about how to deploy this architecture in your accounts, see this blog post. For a Terraform version of this process, we encourage you to check out this GitHub repository.
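The validation stage can be approximated with a short gate script. This Python sketch assumes a client with a `validate_policy` method shaped like the boto3 `accessanalyzer` client's, returning a `findings` list whose entries carry a `findingType`. Treating both `ERROR` and `SECURITY_WARNING` as blocking is a choice made for this example, and `StubAnalyzer` is a hypothetical offline stand-in that flags only wildcard actions.

```python
import json

# Assumption for this sketch: these finding types stop the pipeline.
BLOCKING_TYPES = {"ERROR", "SECURITY_WARNING"}


def blocked_policies(client, policies: dict) -> dict:
    """Map each policy name to its blocking findings, omitting clean policies."""
    blocked = {}
    for name, document in policies.items():
        response = client.validate_policy(
            policyDocument=json.dumps(document),
            policyType="IDENTITY_POLICY",
        )
        hits = [f for f in response["findings"] if f["findingType"] in BLOCKING_TYPES]
        if hits:
            blocked[name] = hits
    return blocked


def exit_code(blocked: dict) -> int:
    """Non-zero exit codes conventionally fail a pipeline stage."""
    return 1 if blocked else 0


class StubAnalyzer:
    """Offline stand-in; the real service applies many more checks."""

    def validate_policy(self, policyDocument, policyType):
        findings = []
        for statement in json.loads(policyDocument)["Statement"]:
            actions = statement["Action"]
            actions = [actions] if isinstance(actions, str) else actions
            if statement["Effect"] == "Allow" and "*" in actions:
                findings.append({
                    "findingType": "SECURITY_WARNING",
                    "findingDetails": "Stub: wildcard action.",
                })
        return {"findings": findings}


policies = {
    "app-role": {"Version": "2012-10-17", "Statement": [
        {"Effect": "Allow", "Action": ["s3:GetObject"], "Resource": "arn:aws:s3:::example-bucket/*"}]},
    "admin-role": {"Version": "2012-10-17", "Statement": [
        {"Effect": "Allow", "Action": "*", "Resource": "*"}]},
}

blocked = blocked_policies(StubAnalyzer(), policies)
for name, hits in blocked.items():
    print(f"{name}: {len(hits)} blocking finding(s)")
print("exit code:", exit_code(blocked))
```

In a real pipeline you would load the policy documents from the committed files, pass a real client instead of the stub, follow any pagination token in the response, and call `sys.exit` with the computed code.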

9. Remove excess privileges over time

Our final strategy focuses on existing permissions and how to remove excess privileges over time. You can determine which privileges are excessive by analyzing the data on which permissions are granted and determining what’s used and what’s not used. Even if you’re developing new policies, you might later discover that some permissions that you enabled were unused, and you can remove that access later. This means that you don’t have to be 100% perfect when you create a policy today, but can rather improve your policies over time. To help with this, we’ll quickly review three recommendations:

- Restrict unused permissions by using service control policies (SCPs)

- Remove unused identities

- Remove unused services and actions from policies

First, as discussed in Part 1 of this series, SCPs are a broad guardrail type of control that can deny permissions across your AWS Organizations organization, a set of your AWS accounts, or a single account. You can start by identifying services that are not used by your teams, despite being allowed by these SCPs. You might also want to identify services that your organization doesn’t intend to use. In those cases, you might consider restricting that access, so that you retain access only to the services that are actually required in your accounts. If you’re interested in doing this, we’d recommend that you review the Refining permissions in AWS using last accessed information topic in the IAM documentation to get started.
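One way to turn last accessed information into a candidate SCP is sketched below in Python. It assumes input records shaped like the `ServicesLastAccessed` entries returned by the IAM last accessed APIs, with a `ServiceNamespace` and an optional `LastAuthenticated` timestamp. The 90-day threshold, the function names, and the sample data are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def unused_namespaces(services_last_accessed: list, now: datetime, max_age_days: int = 90) -> list:
    """Return service namespaces never used, or not used within the window."""
    cutoff = now - timedelta(days=max_age_days)
    unused = []
    for service in services_last_accessed:
        # Assumption: the key is absent when the service was never accessed.
        last = service.get("LastAuthenticated")
        if last is None or last < cutoff:
            unused.append(service["ServiceNamespace"])
    return sorted(unused)


def deny_services_scp(namespaces: list) -> dict:
    """Build a candidate SCP that denies every action in the given services."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnusedServices",
                "Effect": "Deny",
                "Action": [f"{namespace}:*" for namespace in namespaces],
                "Resource": "*",
            }
        ],
    }


# Hand-written sample data with a fixed "now" so the result is reproducible.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
sample = [
    {"ServiceNamespace": "s3", "LastAuthenticated": now - timedelta(days=5)},
    {"ServiceNamespace": "sagemaker", "LastAuthenticated": now - timedelta(days=200)},
    {"ServiceNamespace": "rekognition"},
]

unused = unused_namespaces(sample, now)
scp = deny_services_scp(unused)
print(unused)
print(scp["Statement"][0]["Action"])
```

Treat the output as a proposal for human review rather than something to attach automatically: a service that is rarely used, such as one needed only during incident response, can look unused within any fixed window.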

Second, you can focus your attention more narrowly to identify unused IAM roles, unused access keys for IAM users, and unused passwords for IAM users either at an account-specific level or the organization-wide level. To do this, you can use IAM Access Analyzer’s Unused Access Analyzer capability.

Third, the same Unused Access Analyzer capability also enables you to go a step further to identify permissions that are granted but not actually used, with the goal of removing unused permissions. IAM Access Analyzer creates findings for the unused permissions. If the granted access is required and intentional, then you can archive the finding and create an archive rule to automatically archive similar findings. However, if the granted access is not required, you can modify or remove the policy that grants the unintended access. The following screenshot shows an example of the dashboard for IAM Access Analyzer’s unused access findings.
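The second and third recommendations can be combined into a simple triage step. The following Python sketch sorts unused access findings into identities to review and roles whose permissions could be trimmed. It assumes each finding is a dictionary with `findingType`, `resource`, and `status` keys, and that the finding type names are `UnusedIAMRole`, `UnusedIAMUserAccessKey`, `UnusedIAMUserPassword`, and `UnusedPermission`; verify these against the current API reference before relying on them. The sample findings are hand-written.

```python
# Assumed finding type names for unused access findings.
IDENTITY_FINDINGS = {"UnusedIAMRole", "UnusedIAMUserAccessKey", "UnusedIAMUserPassword"}
PERMISSION_FINDINGS = {"UnusedPermission"}


def triage(findings: list) -> dict:
    """Split active findings into identities to review and permissions to trim."""
    plan = {"review_identity": [], "trim_permissions": []}
    for finding in findings:
        # Archived or resolved findings were already handled.
        if finding.get("status") != "ACTIVE":
            continue
        if finding["findingType"] in IDENTITY_FINDINGS:
            plan["review_identity"].append(finding["resource"])
        elif finding["findingType"] in PERMISSION_FINDINGS:
            plan["trim_permissions"].append(finding["resource"])
    return plan


# Hand-written sample data, not real API output.
sample_findings = [
    {"findingType": "UnusedIAMRole", "status": "ACTIVE",
     "resource": "arn:aws:iam::111122223333:role/app/old-batch-job"},
    {"findingType": "UnusedPermission", "status": "ACTIVE",
     "resource": "arn:aws:iam::111122223333:role/app/report-reader"},
    {"findingType": "UnusedIAMUserAccessKey", "status": "ARCHIVED",
     "resource": "arn:aws:iam::111122223333:user/break-glass"},
]

plan = triage(sample_findings)
print(plan)
```

Skipping archived findings mirrors the archive rule described above: access that was reviewed and judged intentional does not reappear in the work queue.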

Figure 5: Screenshot of IAM Access Analyzer dashboard

When we talk to customers, we often hear that the principle of least privilege is great in principle, but they would rather focus on having just enough privilege. One mental model that’s relevant here is the 80/20 rule (also known as the Pareto principle), which states that 80% of your outcome comes from 20% of your input (or effort). The flip side is that the remaining 20% of outcome will require 80% of the effort—which means that there are diminishing returns for additional effort. Figure 6 shows how the Pareto principle relates to the concept of least privilege, on a scale from maximum privilege to perfect least privilege.

Figure 6: Applying the Pareto principle (80/20 rule) to the concept of least privilege

The application of the 80/20 rule to permissions management—such as refining existing permissions—is to identify what your acceptable risk threshold is and to recognize that as you perform additional effort to eliminate that risk, you might produce only diminishing returns. However, in pursuit of least privilege, you’ll still want to work toward that remaining 20%, while being pragmatic about the remainder of the effort.

Remember that least privilege is a journey. Two ways to be pragmatic along this journey are to use feedback loops as you refine your permissions, and to prioritize. For example, focus on what is sensitive to your accounts and your team. Restrict access to production identities first before moving to environments with less risk, such as development or testing. Prioritize reviewing permissions for roles or resources that enable external, cross-account access before moving to the roles that are used in less sensitive areas. Then move on to the next priority for your organization.

Conclusion

Thank you for taking the time to read this two-part series. In these two blog posts, we described nine strategies for implementing least privilege in IAM at scale. Across these nine strategies, we introduced some mental models, tools, and capabilities that can assist you to scale your approach. Let’s consider some of the key takeaways that you can use in your journey of setting, verifying, and refining permissions.

Cloud administrators and developers will set permissions, and can use identity-based policies or resource-based policies to grant access. Administrators can also use multiple accounts as boundaries, and set additional guardrails by using service control policies, permissions boundaries, block public access, VPC endpoint policies, and data perimeters. When cloud administrators or developers create new policies, they can use IAM Access Analyzer’s policy generation capability to generate new policies to grant permissions.

Cloud administrators and developers will then verify permissions. For this task, they can use both IAM Access Analyzer’s policy validation and peer review to determine if the permissions that were set have issues or security risks. These tools can be leveraged in a CI/CD pipeline too, before the permissions are set. IAM Access Analyzer’s custom policy checks can be used to detect nonconformant updates to policies.

To both verify existing permissions and refine permissions over time, cloud administrators and developers can use IAM Access Analyzer’s external access analyzers to identify resources that were shared with external entities. They can also use either IAM Access Advisor’s last accessed information or IAM Access Analyzer’s unused access analyzer to find unused access. In short, if you’re looking for a next step to streamline your journey toward least privilege, be sure to check out IAM Access Analyzer.

If you have feedback about this post, submit comments in the Comments section below. If you have questions about this post, contact AWS Support.
