Ransomware mitigation: Top 5 protections and recovery preparation actions

In this post, I’ll cover the top five things that Amazon Web Services (AWS) customers can do to help protect and recover their resources from ransomware. This blog post focuses specifically on preemptive actions that you can take.

#1 – Set up the ability to recover your apps and data

For a traditional encrypt-in-place ransomware attempt to succeed, the actor responsible for the attempt must be able to prevent you from accessing your data, and then hold your data for ransom. The first thing that you should do to protect your account is to make sure that you can recover your data, regardless of how it was made inaccessible. Backup solutions protect and restore data, and disaster recovery (DR) solutions offer fast recovery of data and workloads.

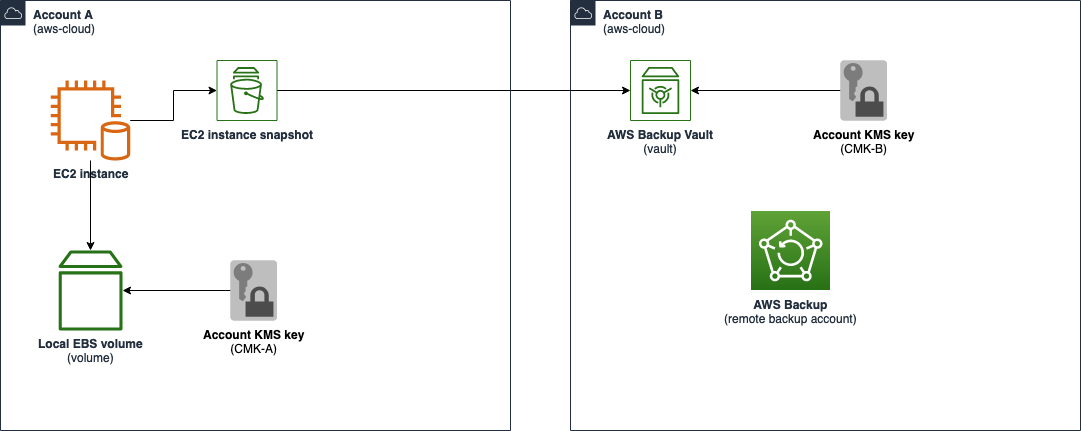

AWS makes this process significantly easier for you with services like AWS Backup, or CloudEndure Disaster Recovery, which offer robust infrastructure DR. I’ll review how to use both of these services to help recover your data. When you choose a data backup solution, simply creating a snapshot of an Amazon Elastic Compute Cloud (Amazon EC2) instance isn’t enough. A powerful feature of the AWS Backup service is that when you create a backup vault, you can use a different customer master key (CMK) in the AWS Key Management Service (AWS KMS). This is powerful because the CMK can have a key policy that allows AWS operators to use the key to encrypt the backup, but you can limit decryption to a completely different principal.

In Figure 1, I show an account that locally encrypted their EC2 Amazon Elastic Block Store (Amazon EBS) volume by using CMK A, but AWS Backup uses CMK B. If a user in account A with a decrypt grant on CMK A attempts to access the backup, even if the user is authorized by the AWS Identity and Access Management (IAM) principal access policy, the CMK policy won’t allow access to the encrypted data.

Figure 1: An account using AWS Backup that stores data in a separate account with different key material

If you place the backup or replication into a separate account that’s dedicated just for backup, this also helps to reduce the likelihood that a threat actor can destroy or tamper with the backup. AWS Backup now natively supports this cross-account capability (https://docs.aws.amazon.com/aws-backup/latest/devguide/cross-account-backup.html), which makes the backup process even easier. The AWS Backup Developer Guide provides instructions for using this functionality, as well as the policy that you’ll need to apply.
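The split between who can encrypt and who can decrypt can be sketched as a key policy document. This is a minimal illustration, not the policy from the AWS Backup Developer Guide: the function names, the three role ARNs, and the exact action lists are assumptions, and the actions that AWS Backup needs for a given resource type should be confirmed in the service documentation.

```python
import json


def backup_key_policy(admin_arn: str, backup_arn: str, restore_arn: str) -> dict:
    """Build a key policy for CMK B that separates encrypt and decrypt principals.

    All three ARNs are hypothetical placeholders supplied by the caller.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Administrators manage the key but get no cryptographic actions.
                "Sid": "KeyAdministration",
                "Effect": "Allow",
                "Principal": {"AWS": admin_arn},
                "Action": ["kms:DescribeKey", "kms:GetKeyPolicy",
                           "kms:PutKeyPolicy", "kms:ScheduleKeyDeletion",
                           "kms:CancelKeyDeletion"],
                "Resource": "*",
            },
            {   # The backup principal can write encrypted backups only.
                "Sid": "EncryptBackups",
                "Effect": "Allow",
                "Principal": {"AWS": backup_arn},
                "Action": ["kms:Encrypt", "kms:GenerateDataKey", "kms:DescribeKey"],
                "Resource": "*",
            },
            {   # Decryption is limited to a completely different principal.
                "Sid": "DecryptForRestore",
                "Effect": "Allow",
                "Principal": {"AWS": restore_arn},
                "Action": ["kms:Decrypt", "kms:DescribeKey"],
                "Resource": "*",
            },
        ],
    }


def principals_allowed(policy: dict, action: str) -> set:
    """Return the principals whose Allow statements list the exact action."""
    allowed = set()
    for stmt in policy["Statement"]:
        actions = stmt["Action"]
        if isinstance(actions, str):
            actions = [actions]
        if stmt["Effect"] == "Allow" and action in actions:
            allowed.add(stmt["Principal"]["AWS"])
    return allowed


if __name__ == "__main__":
    policy = backup_key_policy(
        "arn:aws:iam::111122223333:role/KeyAdmin",
        "arn:aws:iam::111122223333:role/BackupWriter",
        "arn:aws:iam::444455556666:role/RestoreOperator",
    )
    print(json.dumps(policy, indent=2))
```

A quick check such as `principals_allowed(policy, "kms:Decrypt")` makes it easy to confirm in a review or a unit test that the backup writer never appears among the principals that can decrypt.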

Make sure that you’re backing up your data in all supported services and that your backup schedule is based on your business recovery time objective (RTO) and recovery point objective (RPO).

Now, let’s take a look at how CloudEndure Disaster Recovery works.

Figure 2: An overview of how CloudEndure Disaster Recovery works

The high-level architecture diagram in Figure 2 illustrates how CloudEndure Disaster Recovery keeps your entire on-premises environment in sync with replicas in AWS and ready to fail over to AWS at any time, with aggressive recovery objectives and significantly reduced total cost of ownership (TCO). On the left is the source environment, which can be composed of different types of applications; in this case, I give Oracle databases and SQL Servers as examples. And although I’m highlighting DR from on-premises to AWS in this example, CloudEndure Disaster Recovery can provide the same functionality and improved recovery performance between AWS Regions for workloads that are already in AWS.

The CloudEndure Agent is deployed on the source machines without requiring any kind of reboot and without impacting performance. That initiates nearly continuous replication of that data into AWS. CloudEndure Disaster Recovery also provisions a low-cost staging area that helps reduce the cost of cloud infrastructure during replication, and until that machine actually needs to be spun up during failover or disaster recovery tests.

When a customer experiences an outage, CloudEndure Disaster Recovery launches the machines in the appropriate AWS Region VPC and target subnets of your choice. The dormant lightweight state, called the Staging Area, is now launched into the actual servers that have been migrated from the source environment (the Oracle databases and SQL Servers, in this example). One of the features of CloudEndure Disaster Recovery is point-in-time recovery, which is important in the case of a ransomware event, because you can use this feature to recover your environment to a previous consistent point in time of your choosing. In other words, you can go back to the environment you had before the event.

The machine conversion technology in CloudEndure Disaster Recovery means that those replicated machines can run natively within AWS, and the process typically takes just minutes for the machines to boot. You can also conduct frequent DR readiness tests without impacting replication or user activities.

Another service that’s useful for data protection is the AWS object storage service, Amazon Simple Storage Service (Amazon S3), where you can use features such as object versioning to help prevent objects from being overwritten with ransomware-encrypted files, or Object Lock, which provides a write once, read many (WORM) solution to help prevent objects from ever being modified or overwritten.

For more information on developing a DR plan and a business continuity plan, see the following pages:
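As a rough sketch of those two S3 features, the helper below builds the configuration documents that the S3 API accepts for versioning and for a default Object Lock retention rule. The function name, the 30-day retention period, and the bucket name in the comments are assumptions chosen for illustration; the right retention mode and period depend on your own recovery and compliance requirements.

```python
# Versioning must be enabled on a bucket before Object Lock can be used.
VERSIONING_ENABLED = {"Status": "Enabled"}


def object_lock_configuration(mode: str, days: int) -> dict:
    """Build a default-retention Object Lock configuration (WORM).

    mode: "GOVERNANCE" (privileged users can override) or
          "COMPLIANCE" (no user can shorten or remove retention).
    days: how long each new object version stays locked.
    """
    if mode not in ("GOVERNANCE", "COMPLIANCE"):
        raise ValueError(f"unsupported retention mode: {mode}")
    if days < 1:
        raise ValueError("retention must be at least one day")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": days}},
    }


if __name__ == "__main__":
    config = object_lock_configuration("COMPLIANCE", 30)
    print(config)
    # With boto3 installed and credentials configured, these documents could be
    # applied to a hypothetical bucket roughly as follows:
    #   s3 = boto3.client("s3")
    #   s3.put_bucket_versioning(
    #       Bucket="example-backup-bucket",
    #       VersioningConfiguration=VERSIONING_ENABLED)
    #   s3.put_object_lock_configuration(
    #       Bucket="example-backup-bucket",
    #       ObjectLockConfiguration=config)
```

Governance mode is the more forgiving choice while you are still tuning retention periods, because compliance mode retention can’t be shortened after it is set.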

#2 – Encrypt your data

In addition to holding data for ransom, more recent ransomware events increasingly use double extortion schemes. A double extortion is when the actor not only encrypts the data, but also exfiltrates the data and threatens to release it if the ransom isn’t paid.

To help protect your data, you should always enable encryption of the data and segment your workflow so that authorized systems and users have limited access to use the key material to decrypt the data.

As an example, let’s say that you have a web application that uses an API to write data objects into an S3 bucket. Rather than allowing the application to have full read and write permissions, limit the application to just a single operation (for example, PutObject). Smaller, more reusable code is also easier to manage, so segmenting the workflow also helps developers to work more quickly. An example of this type of workflow, where separate CMK policies are used for read operations and write operations to limit access, is laid out in Figure 3.

Figure 3: A serverless workflow that uses separate CMK policies for read operations and write operations
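The write-only half of that workflow might be expressed as an IAM policy like the one built below. This is a sketch under assumptions: the function name, bucket name, prefix, and key ARN are hypothetical, and the second statement assumes the bucket uses SSE-KMS with a dedicated write key, in which case the writer needs to generate data keys but never needs to decrypt.

```python
import json
from typing import Optional


def write_only_policy(bucket: str, prefix: str = "",
                      write_key_arn: Optional[str] = None) -> dict:
    """Build an IAM policy that allows PutObject and nothing else on a bucket."""
    statements = [{
        "Sid": "WriteObjectsOnly",
        "Effect": "Allow",
        "Action": "s3:PutObject",
        "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
    }]
    if write_key_arn is not None:
        # Assumption: objects are encrypted with SSE-KMS under a write-side CMK.
        # The writer can request data keys for encryption; kms:Decrypt is
        # deliberately absent and belongs to the separate read-side role.
        statements.append({
            "Sid": "EncryptWithWriteKey",
            "Effect": "Allow",
            "Action": "kms:GenerateDataKey",
            "Resource": write_key_arn,
        })
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    policy = write_only_policy(
        "example-ingest-bucket",
        prefix="uploads/",
        write_key_arn="arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID",
    )
    print(json.dumps(policy, indent=2))
```

A matching read-side role would carry `s3:GetObject` and `kms:Decrypt` instead, so that compromising the web application alone doesn’t expose the stored objects.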

It’s important to note that although AWS managed CMKs can help you to meet regulatory requirements for data at rest encryption, they don’t support custom key policies. Customers who want to control how their key material is used must use a customer managed CMK.

For data that’s stored locally on Amazon EBS, remember that while the blocks are encrypted by using AWS KMS, after the server boots, your data is unencrypted locally at the operating system level. If you have sensitive data that’s being stored in your application locally, consider using tooling like the AWS Encryption SDK or Encryption CLI to store that data in an encrypted format.

As Amazon Chief Technology Officer Werner Vogels says, encrypt everything!

Figure 4: Amazon Chief Technology Officer Werner Vogels wants customers to encrypt everything

#3 – Apply critical patches

For an actor to get access to a system, they must take advantage of a vulnerability or misconfiguration. Although many organizations patch their infrastructure, some only do so on a weekly or monthly basis, and that can be inadequate for patching critical systems that require 24/7 operation. Increasingly, threat actors are able to reverse engineer patches or Common Vulnerabilities and Exposures (CVE) announcements in hours. You should deploy security-related patches, especially those that are high severity, with the least amount of delay possible.

AWS Systems Manager can help you to automate this process in the cloud and on premises. With Systems Manager patch baselines, you can apply patches based on machine tags (for example, development versus production) but also based on patch type. For example, the predefined patch baseline AWS-AmazonLinuxDefaultPatchBaseline approves all operating system patches that are classified as “Security” and that have a severity level of “Critical” or “Important.” Patches are auto-approved seven days after release. The baseline also auto-approves all patches with a classification of “Bugfix” seven days after release.

If you want a more aggressive patching posture, you can instead create a custom baseline. For example, in Figure 5, I’ve created a baseline for all Windows versions with a critical severity.

Figure 5: An example of the creation of a custom patch baseline for Systems Manager

I can then set up an hourly scheduled event to scan all or part of my fleet and patch based on this baseline. In Figure 6, I show an example of this type of workflow taken from this AWS blog post, which gives an overview of the patch baseline process and covers how to use it in your cloud environment.

Figure 6: Example workflow showing how to scan, check, patch, and report by using Systems Manager
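A custom baseline like the one in Figure 5 can also be described in code. The sketch below only builds the request parameters; the function name and baseline name are hypothetical, and the choice to approve critical patches with zero days of delay is an assumption that illustrates the more aggressive posture, not a value taken from the figure.

```python
def critical_windows_baseline(name: str, approve_after_days: int = 0) -> dict:
    """Build parameters for a custom Windows patch baseline.

    Approves patches with an MSRC severity of Critical after the given delay.
    No product filter is included, so the rule is not limited to one
    Windows version.
    """
    if approve_after_days < 0:
        raise ValueError("approve_after_days cannot be negative")
    return {
        "Name": name,
        "OperatingSystem": "WINDOWS",
        "Description": "Auto-approve critical severity patches",
        "ApprovalRules": {
            "PatchRules": [{
                "PatchFilterGroup": {
                    "PatchFilters": [
                        {"Key": "MSRC_SEVERITY", "Values": ["Critical"]},
                    ]
                },
                "ApproveAfterDays": approve_after_days,
            }]
        },
    }


if __name__ == "__main__":
    params = critical_windows_baseline("example-critical-windows")
    print(params)
    # With boto3 installed and credentials configured, the baseline could be
    # created roughly as follows:
    #   ssm = boto3.client("ssm")
    #   ssm.create_patch_baseline(**params)
```

Keeping the baseline definition in code makes it possible to review changes to the approval delay the same way you review any other change to production.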

In addition, if you’re using AWS Organizations, this blog post will show you how you can apply this method organization-wide.

AWS offers many tools to make patching easier, and making sure that your servers are fully patched will help reduce your susceptibility to ransomware.

#4 – Follow a security standard

Don’t guess whether your environment is secure. Most commercial and public-sector customers are subject to some form of regulation or compliance standard. You should be measuring your security and risk posture against recognized standards as an ongoing practice. If you don’t have a framework that you need to follow, consider using the AWS Well-Architected Framework as your baseline.

With AWS Security Hub, you can view data from AWS security services and third-party tools in a single view and also benchmark your account against standards or frameworks like the CIS AWS Foundations Benchmark, the Payment Card Industry Data Security Standard (PCI DSS), and the AWS Foundational Security Best Practices. These are automated scans of your environment that can alert you when drifts in compliance occur. You can also choose to use AWS Config conformance packs to automate a subset of controls for NIST 800-53, Health Insurance Portability and Accountability Act (HIPAA), Korea – Information Security Management System (ISMS), as well as a growing list of over 60 conformance pack templates at the time of this publication.

Another important aspect of following best practices is to implement least privilege at all levels. In AWS, you can use IAM to write policies that enforce least privilege. These policies, when applied through roles, will limit the actor’s ability to advance in your environment. Access Analyzer is a new feature of IAM that allows you to more easily generate least privilege permissions, and it is covered in this blog post.

#5 – Make sure you’re monitoring and automating responses

Make sure you have robust monitoring and alerting in place. Each of the items I described earlier is a powerful tool to help you protect against a ransomware event, but none will work unless you have strong monitoring in place to validate your assumptions.

Here, I want to provide some specific examples based on the examples earlier in this post.

If you’re backing up your data by using AWS Backup, as described in item #1 (Set up the ability to recover your apps and data), you should have Amazon CloudWatch set up to send alerts when a backup job fails. When an alert is triggered, you also need to act on it. If your response to an AWS alert email would be to re-run the job, you should automate that workflow by using AWS Lambda. If a subsequent failure occurs, open a ticket in your ticketing system automatically or page your operations team.
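The retry-then-escalate logic described here can be sketched independently of any AWS SDK call. In the example below, the event shape (a `detail` object with `state` and `resourceArn` fields) is an assumption about what the backup failure notification contains, and `rerun_job`, `open_ticket`, and the `attempts` store are hypothetical stand-ins for whatever your Lambda function would use to restart the job, reach your ticketing system, and persist state between invocations.

```python
from typing import Callable, Dict


def handle_backup_event(
    event: dict,
    attempts: Dict[str, int],
    rerun_job: Callable[[dict], None],
    open_ticket: Callable[[dict], None],
    max_retries: int = 1,
) -> str:
    """Re-run a failed backup job, and escalate if it has already been retried."""
    detail = event.get("detail", {})
    if detail.get("state") != "FAILED":
        return "ignored"  # Only failed jobs need a response.

    resource = detail.get("resourceArn", "unknown")
    if attempts.get(resource, 0) < max_retries:
        # First failure for this resource: retry automatically.
        attempts[resource] = attempts.get(resource, 0) + 1
        rerun_job(detail)
        return "retried"

    # A subsequent failure: hand the problem to people.
    open_ticket(detail)
    return "escalated"


if __name__ == "__main__":
    store: Dict[str, int] = {}
    failed = {"detail": {"state": "FAILED",
                         "resourceArn": "arn:aws:ec2:us-east-1:111122223333:volume/vol-EXAMPLE"}}
    print(handle_backup_event(failed, store, print, print))
    print(handle_backup_event(failed, store, print, print))
```

In a real Lambda function the attempt count can’t live in memory, because each invocation may run in a fresh environment, so it would need to be stored somewhere durable.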

If you’re encrypting all of your data, as described in item #2 (Encrypt your data), are you watching AWS CloudTrail to see when AWS KMS denies permission to an operation?

Additionally, are you monitoring and acting on patch management baselines as described in item #3 (Apply critical patches) and responding when a patch isn’t able to successfully deploy?

Last, are you watching the compliance status of your Security Hub compliance reports and taking action on findings? You also need to monitor your environment for suspicious activity, investigate, and act quickly to mitigate risks. This is where Amazon GuardDuty, Security Hub, and Amazon Detective can be valuable.

AWS makes it easier to create automated responses to the alerts I mentioned earlier. The multi-account response solution in this blog post provides a good starting point that you can use to customize a response based on the needs of your workload.
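One way to watch for those denials is to filter CloudTrail records for AWS KMS calls that failed with an access error. The sketch below works on records that have already been loaded as dictionaries; the function name is hypothetical, and treating `AccessDenied` as the error code of interest is an assumption that you should check against the records in your own trail.

```python
from typing import Iterable, List


def kms_denials(records: Iterable[dict]) -> List[dict]:
    """Return a summary of CloudTrail records where AWS KMS denied a request."""
    denials = []
    for record in records:
        if (record.get("eventSource") == "kms.amazonaws.com"
                and record.get("errorCode") == "AccessDenied"):
            denials.append({
                "time": record.get("eventTime"),
                "operation": record.get("eventName"),
                "principal": record.get("userIdentity", {}).get("arn"),
            })
    return denials


if __name__ == "__main__":
    sample = [
        {"eventSource": "kms.amazonaws.com", "eventName": "Decrypt",
         "eventTime": "2021-01-01T00:00:00Z", "errorCode": "AccessDenied",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:user/example"}},
        {"eventSource": "kms.amazonaws.com", "eventName": "Encrypt",
         "eventTime": "2021-01-01T00:01:00Z"},
        {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
         "eventTime": "2021-01-01T00:02:00Z", "errorCode": "AccessDenied"},
    ]
    for denial in kms_denials(sample):
        print(denial)
```

A sudden run of denied Decrypt calls from one principal is worth an alert, because it can mean that something is trying to read data it was never meant to reach.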

Conclusion

In this blog post, I showed you the top five actions that you can take to protect against and recover from a ransomware event.

In addition to the advice provided here, NIST has published guidance on preventing ransomware, which you can view in the NIST SP1800-25 publication.

If you have feedback about this post, submit comments in the Comments section below.

Want more AWS Security how-to content, news, and feature announcements? Follow us on Twitter.
