Network Containers and Security – Same, but Different

Introduction

Network and security teams seem to have had a love-hate relationship with each other since the beginning of IT. Having worked extensively and built expertise with both for the past few decades, we often see how each has similar goals: both seek to provide connectivity and bring value to the business. At the same time, there are certainly notable differences as well. Network teams tend to focus on building architectures that scale and provide universal connectivity, while security teams tend to focus more on limiting that connectivity to prevent unwanted access.

Often, these teams work together, sometimes on the same hardware, where network teams configure connectivity (BGP/OSPF/STP/VLANs/VXLANs/etc.) while security teams configure access controls (ACLs/Dot1x/Snooping/etc.). Other times, we find that Security defines rules and hands them off to Networking to implement. Often, in larger organizations, we find InfoSec in the mix as well, defining somewhat abstract policy and handing that down to Security to render into rulesets that then either get applied in routers, switches, and firewalls directly, or else get handed off to Networking to implement in those devices. These days, Cloud teams play an increasingly large part in those roles as well.

All in all, each group contributes important pieces to the larger puzzle, albeit speaking slightly different languages, so to speak. What’s key to organizational success is for these groups to come together, find and communicate using a common language and framework, and work to reduce the complexity surrounding security controls while increasing the level of security provided, which altogether minimizes risk and adds value to the business.

As container-based development continues to expand, both the roles of who provides security and where those security enforcement points live are quickly changing as well.

The challenge

For the past few years, organizations have begun to significantly improve their security postures, moving from only enforcing security at the perimeter in a North-to-South fashion to enforcement throughout their internal data centers and clouds alike in an East-to-West fashion. Granular control at the workload level is typically referred to as microsegmentation. This shift toward distributed enforcement points has great advantages, but it also presents unique new challenges, such as where those enforcement points will be located and how rulesets will be created, updated, and deprecated when necessary, all with the same level of agility that the business and its developers move at, and with precise accuracy.

At the same time, orchestration systems running container pods, such as Kubernetes (K8S), perpetuate that shift toward new security constructs using mechanisms such as the Container Network Interface, or CNI. CNI provides exactly what it sounds like: an interface with which networking can be provided to a Kubernetes cluster. A plugin, if you will. There are many CNI plugins for K8S, such as pure software overlays like Flannel (leveraging VXLAN) and Calico (leveraging BGP), while others tie the worker nodes running the containers directly into the hardware switches they’re connected to, shifting the responsibility of connectivity back into dedicated hardware.

Regardless of which CNI is used, instantiation of networking constructs shifts from the traditional CLI on a switch to a sort of structured text-code, in the form of YAML or JSON, that is sent to the Kubernetes cluster via its API server.

We now have the groundwork laid, and we can begin to see how things might start to get interesting.

Scale and precision are crucial

As we can see, we are talking about having a firewall in between every single workload and ensuring that those firewalls are always up to date with the latest rules.

Say we have a relatively small operation with only 500 workloads, some of which have been migrated into containers, with more migrations planned every day.

This means that in the traditional environment we’d need 500 firewalls to deploy and maintain, minus the workloads migrated to containers, plus a way to enforce the desired rules for those as well. Now, imagine that a new Active Directory server has just been added to the forest and holds the role of serving LDAP. This means that a slew of new rules must be added to nearly every single firewall, allowing the workload protected by it to talk to the new AD server over a range of ports: TCP 389, 636, 88, etc. If the workload is Windows-based, it likely needs to have MS-RPC open, which means 49152-65535; whereas if it’s not a Windows box, it most certainly should not have those opened.
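To make that fan-out concrete, here is a minimal Python sketch that counts the rules a single new AD server would generate across 500 per-workload firewalls. The inventory, IP addresses, rule syntax, and the one-in-five share of Windows hosts are all hypothetical assumptions for illustration; only the port numbers come from the example above.

```python
from dataclasses import dataclass

# Ports from the example above: LDAP, LDAPS, Kerberos.
AD_TCP_PORTS = [389, 636, 88]
# Dynamic MS-RPC range, only relevant to Windows workloads.
MS_RPC_RANGE = (49152, 65535)


@dataclass(frozen=True)
class Workload:
    name: str
    ip: str
    is_windows: bool


def rules_for_new_ad_server(workload, ad_ip):
    """Return the allow rules one workload's firewall needs for a new AD server."""
    rules = [f"permit tcp {workload.ip} {ad_ip} eq {port}" for port in AD_TCP_PORTS]
    if workload.is_windows:
        low, high = MS_RPC_RANGE
        rules.append(f"permit tcp {workload.ip} {ad_ip} range {low} {high}")
    return rules


def build_inventory(count=500):
    """Hypothetical inventory: every fifth workload is assumed to be Windows."""
    return [
        Workload(
            name=f"wl-{i:03d}",
            ip=f"10.4.{i // 250}.{i % 250 + 1}",
            is_windows=(i % 5 == 0),
        )
        for i in range(count)
    ]


if __name__ == "__main__":
    inventory = build_inventory()
    all_rules = [
        rule
        for wl in inventory
        for rule in rules_for_new_ad_server(wl, "10.9.0.10")  # hypothetical AD address
    ]
    # prints "500 firewalls touched, 1600 rules added"
    print(f"{len(inventory)} firewalls touched, {len(all_rules)} rules added")
```

Even in this toy inventory, one infrastructure change means touching every firewall, and the correct rule set differs per workload depending on its operating system.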

It quickly becomes clear how physical firewalls become untenable at this scale in traditional environments, and how even dedicated virtual firewalls still present the complex challenge of requiring centralized policy with distributed enforcement. Neither does much to help with our need to secure East-to-West traffic within the Kubernetes cluster, between containers. However, one might correctly surmise that any solution business leaders are likely to consider must be able to handle all scenarios equally from a policy creation and management perspective.

It is also apparent that this centralized policy must be hierarchical in nature, defined using natural human language such as “dev cannot talk to prod” rather than the archaic and unmanageable method of IP/CIDR addressing such as “deny ip 10.4.20.0/24 10.27.8.0/24”, and yet the system must still translate that natural language into machine-understandable CIDR addressing.
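The translation step can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular product implements it: the `LABEL_SUBNETS` mapping, the tiny sentence grammar, and the function names are hypothetical, and only the two subnets and the rule format are taken from the example above.

```python
import ipaddress
from itertools import product

# Hypothetical label-to-subnet inventory; the two /24s come from the example above.
LABEL_SUBNETS = {
    "dev": ["10.4.20.0/24"],
    "prod": ["10.27.8.0/24"],
}


def parse_intent(sentence):
    """Parse '<src> cannot talk to <dst>' into (action, src_label, dst_label)."""
    words = sentence.lower().split()
    if (
        len(words) != 5
        or words[1] != "cannot"
        or words[2] not in ("talk", "speak")
        or words[3] != "to"
    ):
        raise ValueError(f"unsupported intent: {sentence!r}")
    return "deny", words[0], words[4]


def render_rules(sentence, inventory=LABEL_SUBNETS):
    """Expand a label-based intent into one CIDR rule per subnet pair."""
    action, src, dst = parse_intent(sentence)
    rules = []
    for s, d in product(inventory[src], inventory[dst]):
        # ip_network validates each CIDR and normalises its text form.
        s_net = ipaddress.ip_network(s)
        d_net = ipaddress.ip_network(d)
        rules.append(f"{action} ip {s_net} {d_net}")
    return rules


if __name__ == "__main__":
    # prints ['deny ip 10.4.20.0/24 10.27.8.0/24']
    print(render_rules("dev cannot talk to prod"))
```

The point of the design is that people maintain only the sentence and the label inventory; the CIDR rules are derived output and can be regenerated whenever a subnet is added to a label.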

The only way this works at any scale is to distribute those rules into every single workload running in every environment, leveraging the native and powerful built-in firewall co-located with each. For containers, this means the firewalls running on the worker nodes must secure traffic between containers (pods) within the node, as well as between nodes.

Business agility and speed

Back to our developers.

Businesses must move at the speed of market change, which can be dizzying at times. They must be able to write code, check that code in to an SCM like Git, and have it pulled, automatically built, tested and, if it passes, pushed into production. If everything works properly, we’re talking between five minutes and a few hours, depending on complexity.

Whether five minutes or five hours, I have personally never witnessed a corporate environment in which a ticket could be submitted to have security policies updated to reflect the new code requirements with any hope of having it completed within a single day, forgetting for a moment about input accuracy and possible remediation for incorrect rule entry. It is usually between a two-day and a two-week process.

This is absolutely unacceptable given the rapid development process we just described, not to mention the dissonance experienced from disaggregated systems and people. This process is rife with problems and is the reason security is so difficult, cumbersome, and error-prone within most organizations. As we shift to a more remote workforce, the problem becomes even further compounded, as relevant parties cannot so easily congregate into “war rooms” to collaborate through the decision-making process.

The simple truth is that policy must accompany code and be implemented directly by the build process itself, and this has never been truer than with container-based development.

Simplicity of automating policy

With Cisco Secure Workload (Tetration), automating policy is easier than you might imagine.

Think with me for a moment about how developers work today when deploying applications on Kubernetes. They create a deployment.yml file, in which they are required to input, at a minimum, the L4 port on which containers can be reached. Developers have become familiar enough with networking and security policy to provision connectivity for their applications, but they may not be fully aware of how their application fits into the wider scope of an organization’s security posture and risk tolerance.

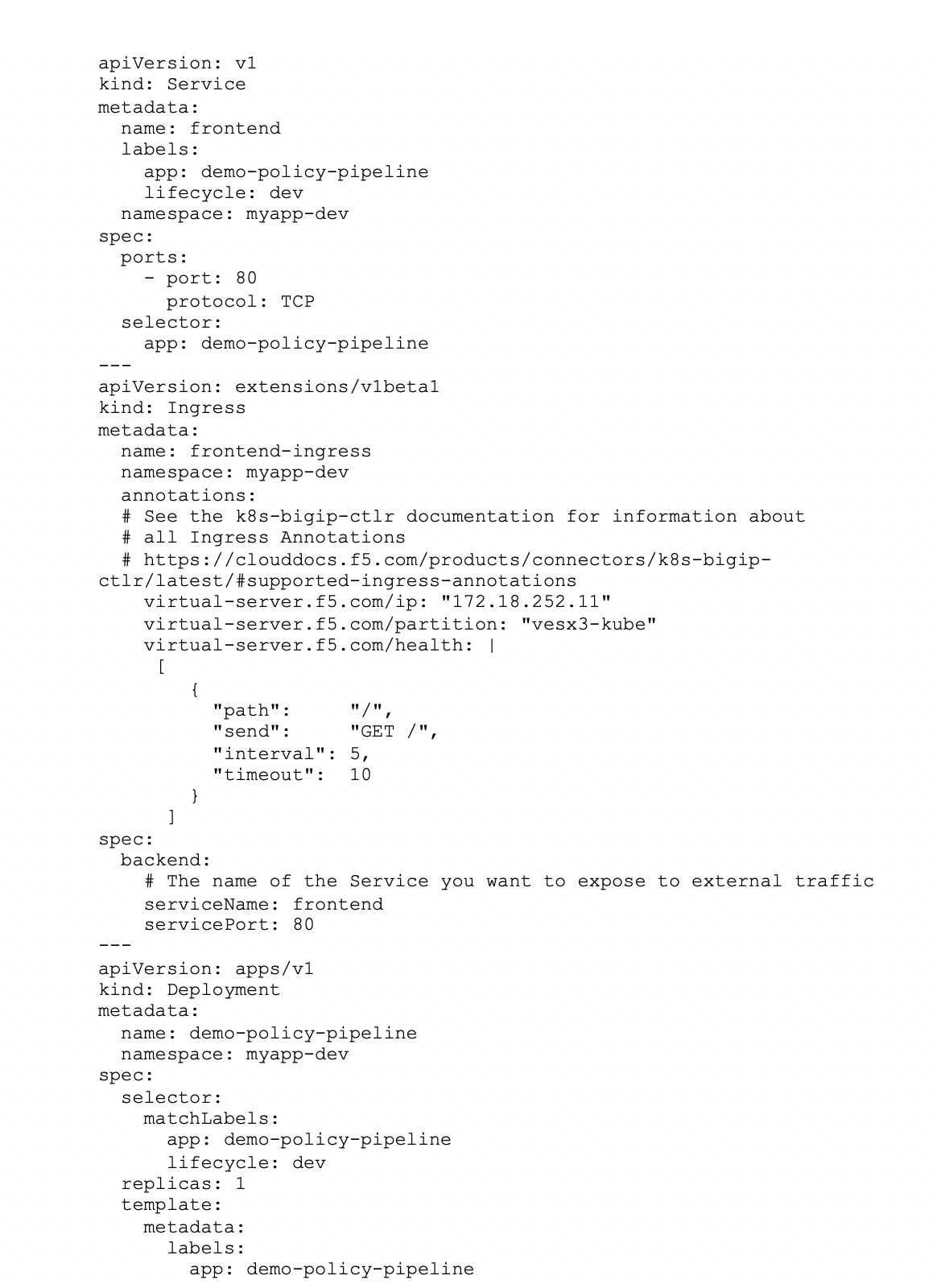

This is illustrated with a simple example of deploying a frontend load balancer and a simple webapp that is reachable on port 80 and will have some connections to both a production database (PROD_DB) and a dev database (DEV_DB). The sample policy for this deployment can be seen in this ‘deploy-dev.yml’ file:

Now think about the minimal effort it would take to code one more small YAML file, specified as kind: NetworkPolicy, and have it automatically deployed by our CI/CD pipeline at build time to our Secure Workload policy engine, which is integrated with the Kubernetes cluster and exchanges the label information that we use to specify source or destination traffic, even specifying the only LDAP user that can reach the frontend app. A sample policy for the above deployment can be seen in this ‘policy-dev.yml’ file:
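As a rough illustration of how small such a file can be, the following Python sketch builds a standard Kubernetes `networking.k8s.io/v1` NetworkPolicy manifest and prints it as JSON, which the Kubernetes API accepts alongside YAML. This is not the policy-dev.yml from the deployment above: the policy name, namespace, and `app` labels are hypothetical, and Secure Workload-specific capabilities such as restricting access to a single LDAP user are not modeled here.

```python
import json


def network_policy(name, namespace, target_labels, allowed_from_labels, port):
    """Build a standard networking.k8s.io/v1 NetworkPolicy as a plain dict.

    Pods matching target_labels accept TCP ingress on `port` only from
    pods matching allowed_from_labels in the same namespace.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": allowed_from_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    # All names and labels below are hypothetical placeholders.
    policy = network_policy(
        name="webapp-allow-frontend",
        namespace="dev",
        target_labels={"app": "webapp"},
        allowed_from_labels={"app": "frontend"},
        port=80,
    )
    print(json.dumps(policy, indent=2))
```

A manifest like this can live in the same repository as the deployment manifest and be versioned and reviewed with it; how the pipeline hands it to the policy engine is outside the scope of this sketch.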

As we can see, the level of difficulty for the development teams is quite minimal, essentially in line with the existing toolsets they are familiar with, yet this yields immense value for their organizations because the policy will be automatically combined and checked against all existing security and compliance policy as defined by the security and networking teams.

Key takeaways

Enabling developers to include policy co-located with the software code it’s meant to protect, and automating the deployment of that policy with the same CI/CD pipelines that deploy their code, provides businesses with speed, agility, versioning, and policy ubiquity in every environment, and ultimately gives them a strong strategic competitive advantage over legacy methods.

If you’re interested, this is just the beginning of what can be achieved with Cisco Secure Workload. There are many more benefits we weren’t able to cover here, which are outlined in another article titled “Empowering Developers to Secure Business” that you may want to check out.

Learn more about Cisco Secure Workload here.