How to scale your authorization needs by using attribute-based access control with S3

In this blog post, we show you how to scale your Amazon Simple Storage Service (Amazon S3) authorization strategy as an alternative to using path-based authorization. You are going to combine attribute-based access control (ABAC) using AWS Identity and Access Management (IAM) with a standard Active Directory Federation Services (AD FS) deployment connected to Microsoft Active Directory. You should understand the concept of IAM roles and know that you can use tags to add additional attributes to your IAM roles and to your objects on Amazon S3. By the end of this post, you will understand how to use tags to extend authorization into the domain of ABAC.

With ABAC in conjunction with Amazon S3 policies, you can authorize users to read objects based on one or more tags that are applied to S3 objects and to the IAM role session of your users based on attributes in Active Directory. This allows for fine-grained access control beyond regular role-based access control (RBAC) in scenarios such as a data lake, where ingestion and writing of the data is separated from users consuming the data. The benefits of ABAC in this solution are that you need to provision fewer IAM roles and that your S3 objects can have different prefixes without the need to explicitly add all those prefixes to your IAM permissions policies like you would with RBAC. The solution in this blog post can be combined with RBAC if you already have roles defined that require access based on the prefix of your S3 objects.

You will learn how to write reusable authorization policies in IAM, how to configure your AD FS and Active Directory setup to apply tags to your IAM role sessions, and how to secure tags on IAM roles and S3 objects. The solution in this post consists of Active Directory and AD FS hosted on Amazon Elastic Compute Cloud (Amazon EC2) instances, but the same steps apply if you host them in any other environment. If you use another Security Assertion Markup Language 2.0 (SAML) compatible identity provider (IdP) for access to Amazon Web Services (AWS), ask the vendor of your IdP whether they support custom SAML claims and how to configure them. The solution in this blog post supports server-side encryption with either Amazon S3-managed keys (SSE-S3) or a customer master key (CMK) stored in AWS Key Management Service (AWS KMS). AWS KMS doesn't support the use of tags for authorization, so when you use CMKs, the IAM role must have permissions to use the specified CMK in the key policy.

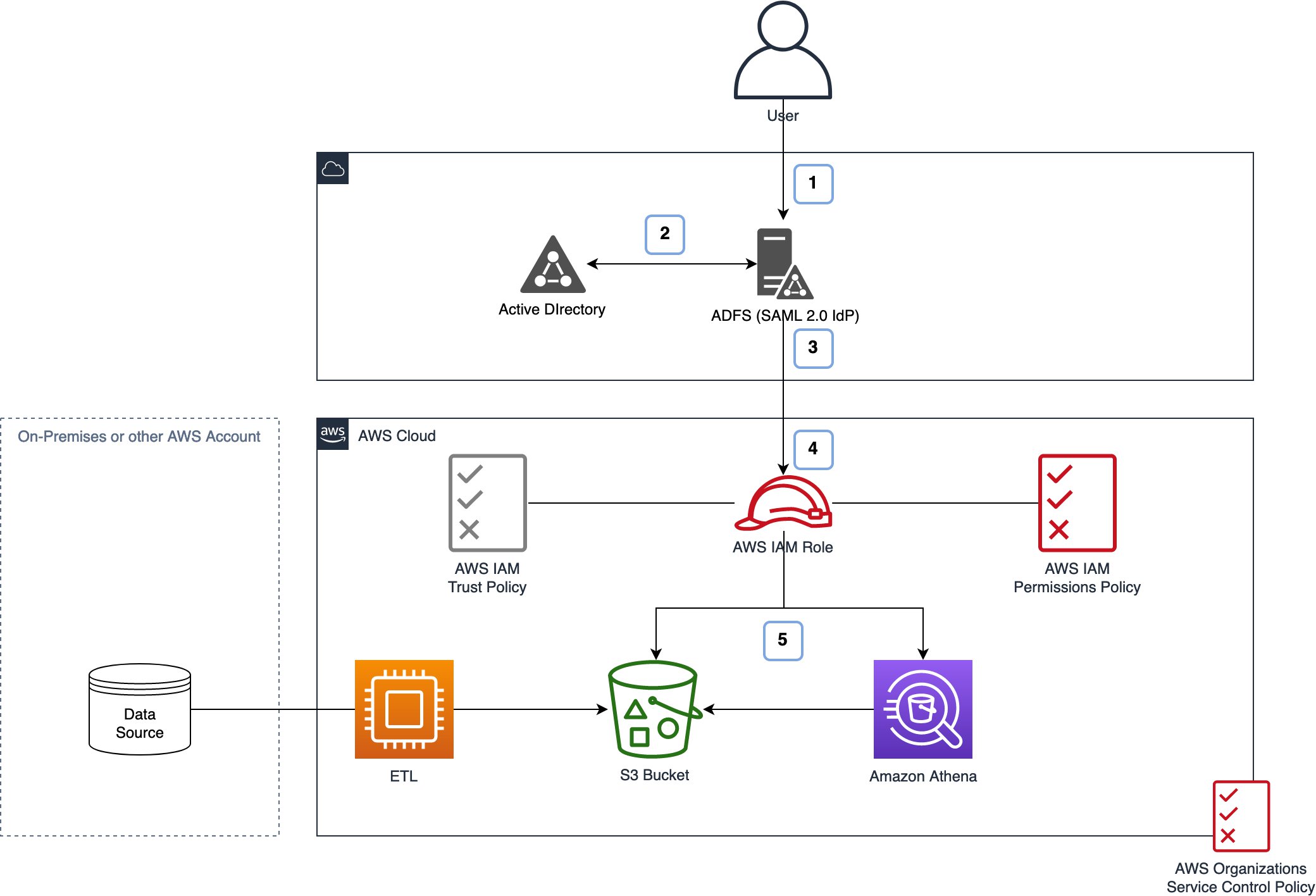

Architecture overview

This blog post shows you the steps to establish the workflow to securely access your data based on tags. It doesn't show you the extract, transform, load (ETL) framework, as this is often customer and solution specific.

To establish the solution, excluding the ETL framework, you attach tags to both the IAM principal (the role session) and the S3 objects in a single S3 bucket. You use IAM policy language to match the tags between the IAM principal and the S3 objects to determine whether a user is authorized to access the S3 object that is part of that particular request. We also show you how to secure your tags to help prevent unauthorized changes to your data and the tags.

Figure 1: Architecture overview

In Figure 1, there are two major workflows defined:

- In the first workflow, users query data on Amazon S3, and we show the authentication workflow they will follow.

- In the second workflow, your data ingest workflow processes data via ETL jobs into Amazon S3.

After you have configured everything, user authentication follows the first workflow illustrated in Figure 1:

- The user authenticates to your IdP, which is AD FS in this case.

- The IdP queries the identity store, which is Active Directory in this case, to retrieve the tag values for the authenticated user.

- The identity store supplies the tag values, which are passed to AWS together with other information in a SAML token.

- IAM checks the trust policy to determine whether the IdP is allowed to federate the user into the specified role.

- Users can access the data directly or through another supported service using the credentials and in accordance with the permissions granted.

Prerequisites

Before you get started, you must have the following components available:

- An AWS account for your workload.

- Microsoft Active Directory and AD FS. The AWS Federated Authentication with Active Directory Federation Services (AD FS) blog post describes how to set this up.

- Administrative access to the account being used and to your AWS Organizations management account, so that you can apply a service control policy.

- Administrative access to your Active Directory and AD FS.

- An empty Amazon S3 bucket in the account of your workload. The solution presented here uses a bucket called aws-blogpost-datalake-tbac.

- A data source that you can ingest into Amazon S3, or some sample data already in Amazon S3. To follow along with the example in Query your data with Athena, create a data set in .csv format. Your table should have at least two fields, one of which should be named dept. The other fields are for you to decide. Name your table hr_dept.csv, upload it to your S3 bucket, and apply a tag with the key Department and the value HR.
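
As an illustration of the last prerequisite, a sample data set of the required shape could be generated with a few lines of Python. This is only a sketch: the column names other than dept and all row values are made up for the example.

```python
import csv
import io

# Hypothetical sample rows; only the "dept" column is required by this post.
ROWS = [
    {"employee_id": "1001", "name": "Example Person A", "dept": "HR"},
    {"employee_id": "1002", "name": "Example Person B", "dept": "HR"},
]


def build_csv(rows):
    """Return the rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["employee_id", "name", "dept"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = build_csv(ROWS)

if __name__ == "__main__":
    # Write the file locally; uploading and tagging it happens separately.
    with open("hr_dept.csv", "w", newline="") as handle:
        handle.write(csv_text)
```

The upload and the Department tag are left out on purpose, because how you apply them depends on your tooling (console, CLI, or SDK).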

Tags and LDAP attributes

Start by defining the tags you want to base your authorization on. Most customers ask for guidance from AWS, but the right tags for your use case depend on your business requirements. You should define tags for your use case by working backwards from the consumers of your data lake to determine what tags and other technical capabilities you need. After you've defined the tags, you can apply them and define the policies. This blog post uses two tags: EmployeeType and Department.

For the tags in this blog post, you can use the Active Directory LDAP attributes Department and Employee-Type. These attributes are already available in the default schema for Active Directory. If your needs aren't covered by the LDAP attributes that are available by default, you can extend your schema to add your own attributes. You attach these LDAP attributes as session tags to the role you set up. This way, one role can have different tag values applied to the role session depending on who assumes the role. The attributes are read from Active Directory and attached to the session of the user when they assume that role.

Figure 2: LDAP attributes and tags

For this blog post, you are going to configure four different users, each with their own Active Directory user account, as outlined in Figure 2. Feel free to use existing users if you don't want to create new ones. Two of them are from the HR department, and the other two are from Finance. Within each department, there is one user of the employee type confidential and another of the type private. That corresponds to the data classification scheme of the example company, which uses public, private, and confidential. The diagram in Figure 2 shows how these user attributes are mapped to AWS.
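
The mapping from directory attributes to session tags can be sketched in Python as follows. The account names are hypothetical placeholders; only the departments, employee types, tag keys, and the role name DataEngineering come from this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DirectoryUser:
    """Subset of Active Directory attributes used in this post."""
    username: str       # hypothetical account name
    department: str     # LDAP attribute Department
    employee_type: str  # LDAP attribute Employee-Type


USERS = [
    DirectoryUser("hr-confidential", "HR", "Confidential"),
    DirectoryUser("hr-private", "HR", "Private"),
    DirectoryUser("finance-confidential", "Finance", "Confidential"),
    DirectoryUser("finance-private", "Finance", "Private"),
]


def session_tags(user):
    """Map the LDAP attributes to the session tag keys used in the policies."""
    return {"Department": user.department, "EmployeeType": user.employee_type}


if __name__ == "__main__":
    # Every user assumes the same role but receives different session tags.
    for user in USERS:
        print(user.username, "-> DataEngineering", session_tags(user))
```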

As you can see in Figure 2, there is only a single IAM role deployed in AWS (DataEngineering), but the assigned session tags are unique to each user assuming the role. This works the same way as RoleSessionName in a regular federated setup. To achieve this, you must determine which LDAP attributes you want to map to which tags in IAM. To add LDAP attributes to your AD FS configuration, you take the following steps:

Define SAML claims in AD FS

As mentioned in the prerequisites section, you should have AD FS already set up with the minimum set of claims and claim rules necessary to establish federation to your AWS account. In this step, you add an extra claim rule to include the session tags in the SAML token when assuming the AWS IAM role.

To open the AD FS new claim rule window

- Open the AD FS management tool on the EC2 instance that AD FS is installed on.

- Choose the Relying Party for AWS in the AD FS management tool.

- To open the current claim issuance policy, choose Edit Claim Issuance Policy.

- To add a new claim rule, choose Add Rule.

- Select Send LDAP Attributes as Claims in the claim rule template list.

Now that you've opened the AD FS management tool and have arrived at the screen to define a new claim rule, it's time to enter the settings as shown in Figure 3.

To define the new claim rule in AD FS

- For Claim rule name, enter SessionTags.

- For Attribute store, choose Active Directory.

- Add two rows to the Mapping of LDAP attributes to outgoing claim types table.

Values for row 1 of your new claim rule

- For LDAP Attribute, enter Employee-Type.

- For Outgoing Claim Type, enter the following: https://aws.amazon.com/SAML/Attributes/PrincipalTag:EmployeeType

Values for row 2 of your new claim rule

- For LDAP Attribute, enter Department.

- For Outgoing Claim Type, enter the following: https://aws.amazon.com/SAML/Attributes/PrincipalTag:Department

To save your new claim rule

- Choose OK and Apply.

- Close the AD FS management tool. Your new claim rule is effective immediately. You don't have to restart AD FS.

Figure 3: Add new claim rule
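
To make the effect of the claim rule more concrete, the following Python sketch builds the two SAML attributes that such a rule would be expected to add to the assertion. It only illustrates the attribute names configured above. The tag values are examples, and the exact XML that AD FS emits may differ in detail.

```python
import xml.etree.ElementTree as ET

SAML_NS = "urn:oasis:names:tc:SAML:2.0:assertion"
PRINCIPAL_TAG_PREFIX = "https://aws.amazon.com/SAML/Attributes/PrincipalTag:"

# Use the conventional "saml" prefix when serializing.
ET.register_namespace("saml", SAML_NS)


def principal_tag_attribute(tag_key, tag_value):
    """Build one SAML Attribute element that carries a session tag."""
    attribute = ET.Element(
        f"{{{SAML_NS}}}Attribute", {"Name": PRINCIPAL_TAG_PREFIX + tag_key}
    )
    value = ET.SubElement(attribute, f"{{{SAML_NS}}}AttributeValue")
    value.text = tag_value
    return attribute


# Example values for an HR user with the employee type Confidential.
attributes = [
    principal_tag_attribute("EmployeeType", "Confidential"),
    principal_tag_attribute("Department", "HR"),
]

if __name__ == "__main__":
    for attribute in attributes:
        print(ET.tostring(attribute, encoding="unicode"))
```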

Combine tags for authorization decisions

Before you move on to the next steps, it's important to understand how these tags work together. In the example, users that have the tag EmployeeType:Confidential are allowed to access objects with all three classifications: public, private, and confidential. Users with the tag EmployeeType:Private can only access data that is tagged and classified as public or private, but not confidential. You build this logic into the policies in the following steps.
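
The classification rule described above can be written down as a small Python function. This is a sketch of the intended decision logic only, not of how IAM evaluates policies. The ordering list and the function name are introduced here for illustration.

```python
# Classifications from least to most restricted, as used by the example company.
CLEARANCE_ORDER = ["Public", "Private", "Confidential"]


def may_read_classification(principal_employee_type, object_classification):
    """Return True if the principal's level is at or above the object's level."""
    try:
        principal_level = CLEARANCE_ORDER.index(principal_employee_type)
        object_level = CLEARANCE_ORDER.index(object_classification)
    except ValueError:
        # Unknown or missing values are treated as "no access".
        return False
    return principal_level >= object_level
```

For example, `may_read_classification("Private", "Confidential")` is false, while `may_read_classification("Confidential", "Public")` is true.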

Data ingestion and object tagging

It's important to understand that for tag-based ABAC to work, your objects must be tagged accordingly. What we have seen work for customers is to make sure that the ETL framework that is responsible for ingesting data into Amazon S3 tags the objects on ingestion.

This solution describes fine-grained control that relies on S3 object tags. These tags are different from tags applied to S3 buckets. The benefit is that within a bucket, or a collection of buckets, you don't have to specify the prefix of objects to authorize users, which greatly increases your potential scale. Instead of having to add hundreds of prefixes to a permissions policy, you can achieve the same result with a few lines of policy language.

Because the solution described requires tags on every object, you must apply these tags to each object individually. If you don't need fine-grained access within your buckets but still want to use tags, you can use an AWS Lambda function, triggered by an Amazon S3 event when new objects are created, to automatically apply your bucket tags to the object.
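
A minimal sketch of such a Lambda function in Python is shown below. It assumes an S3 object-created event notification as the trigger and an execution role that is allowed to read bucket tags and write object tags. The helper name and the optional `s3_client` parameter (used so the logic can be exercised without AWS access) are choices made for this example.

```python
from urllib.parse import unquote_plus


def copy_bucket_tags_to_object(s3_client, bucket, key):
    """Apply the bucket's tag set to one object and return the tags applied."""
    tag_set = s3_client.get_bucket_tagging(Bucket=bucket)["TagSet"]
    # Tags with the reserved "aws:" prefix are skipped in this sketch.
    tag_set = [tag for tag in tag_set if not tag["Key"].startswith("aws:")]
    # Note: this replaces any tags that are already on the object.
    s3_client.put_object_tagging(
        Bucket=bucket, Key=key, Tagging={"TagSet": tag_set}
    )
    return tag_set


def lambda_handler(event, context, s3_client=None):
    """Entry point for S3 object-created notifications."""
    if s3_client is None:
        import boto3  # imported lazily so the sketch can be tested offline
        s3_client = boto3.client("s3")
    tagged = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        copy_bucket_tags_to_object(s3_client, bucket, key)
        tagged.append((bucket, key))
    return tagged
```

The sketch doesn't handle buckets without any tags, for which `get_bucket_tagging` raises an error, and it would need to run under a role that your tag protection policies allow to tag objects.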

IAM permissions

The next step is to set up the IAM role with the correct permissions for accessing your objects on Amazon S3. To authorize users to Amazon S3, you can use either an IAM policy or an S3 bucket policy, as long as the IAM role and the S3 bucket are in the same AWS account.

Note: In this blog post, you use a single AWS account, but a cross-account configuration allows you to increase your scale even further in some scenarios. You don't need to explicitly deny actions in the bucket policy in that case, because a user must be authorized in both the IAM policy and the S3 bucket policy in a cross-account scenario. However, this will increase the complexity of your environment.

You use the IAM policy to set up the basic allow statements, and the S3 bucket policy with three deny statements to set boundaries. This avoids duplication of policy language and helps enforce security centrally. Placing the deny statements in the S3 bucket policy prevents anyone from creating an IAM role with permissions that don't respect these deny statements, because an explicit deny can't be overruled by an allow statement.

Note: For a complete overview of authorization to Amazon S3, see IAM Policies and Bucket Policies and ACLs! Oh, My! (Controlling Access to S3 Resources).

Create the IAM role

To create an IAM role with a custom IAM policy

- Follow the procedure for Creating a role for SAML through step 8.

- After step 8, choose Create policy to create a policy.

- Select JSON and replace the empty policy with the following IAM policy:

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ReadBucket",
            "Effect": "Allow",
            "Action": [
                "s3:GetBucketAcl",
                "s3:ListBucket",
                "s3:ListBucketVersions"
            ],
            "Resource": "arn:aws:s3:::aws-blogpost-datalake-tbac"
        },
        {
            "Sid": "AccessTaggedObjects",
            "Effect": "Allow",
            "Action": [
                "s3:GetObject",
                "s3:GetObjectAcl",
                "s3:GetObjectTagging",
                "s3:GetObjectVersion",
                "s3:GetObjectVersionAcl",
                "s3:GetObjectVersionTagging"
            ],
            "Resource": "arn:aws:s3:::aws-blogpost-datalake-tbac/*",
            "Condition": {
                "StringLike": {
                    "s3:ExistingObjectTag/Department": "${aws:PrincipalTag/Department}"
                }
            }
        }
    ]
}
```

- Choose Review policy.

- Enter DataEngineeringABACPolicy as the Name for the policy.

- Choose Create policy to finish the creation of your new policy.

- Return to the procedure [Creating a role for SAML](https://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_create_for-idp_saml.html#idp_saml_Create) and continue with step 9. Choose the Refresh button in the top right corner and then select your new policy, named DataEngineeringABACPolicy.

- When asked for a name for your new role, enter DataEngineering.

The permissions policy allows access only to data that has the same tag value for Department as that of the user assuming the role. The condition maps the tags applied to the role session of the individual users to the tags applied to objects in your S3 bucket.
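
To illustrate how the Department tag on the role session is compared with the Department tag on an object, here is a small Python approximation of that check. It is not IAM's evaluation engine. The helper names are made up for the sketch, and it only covers the single condition discussed here.

```python
import re

# Matches policy variables of the form ${aws:PrincipalTag/<key>}.
VARIABLE = re.compile(r"\$\{aws:PrincipalTag/([^}]+)\}")


def string_like(value, pattern):
    """Case-sensitive match where * matches any run and ? any single character."""
    regex = "".join(
        ".*" if char == "*" else "." if char == "?" else re.escape(char)
        for char in pattern
    )
    return re.fullmatch(regex, value) is not None


def resolve_variables(pattern, principal_tags):
    """Substitute principal tag variables; return None if a tag is missing."""
    resolved = pattern
    for key in VARIABLE.findall(pattern):
        if key not in principal_tags:
            return None
        resolved = resolved.replace(
            "${aws:PrincipalTag/" + key + "}", principal_tags[key]
        )
    return resolved


def department_condition_matches(object_tags, principal_tags):
    """Approximate the condition that compares the two Department tags."""
    pattern = resolve_variables("${aws:PrincipalTag/Department}", principal_tags)
    object_value = object_tags.get("Department")
    if pattern is None or object_value is None:
        return False
    return string_like(object_value, pattern)
```

With this sketch, a session tagged Department:HR matches an object tagged Department:HR, while a session tagged Department:Finance does not.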

Apply the trust policy to the IAM role

The next step is to change the trust policy associated with your newly created IAM role. This is important to ensure that the IdP can apply the correct tags to your IAM role sessions.

To apply the trust policy

- Sign in to the AWS Management Console and open the IAM console.

- In the navigation pane of the IAM console, choose Roles and select your newly created role named DataEngineering.

- Choose Trust relationship and then choose Edit trust relationship.

- Replace the current trust policy under Policy Document with the following policy:

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "AllowFederation",
            "Effect": "Allow",
            "Action": "sts:AssumeRoleWithSAML",
            "Principal": {
                "Federated": "arn:aws:iam::123456789012:saml-provider/ADFS"
            },
            "Condition": {
                "StringLike": {
                    "aws:RequestTag/Department": "*",
                    "aws:RequestTag/EmployeeType": "*"
                },
                "StringEquals": {
                    "SAML:aud": "https://signin.aws.amazon.com/saml"
                }
            }
        },
        {
            "Sid": "AllowSessionTags",
            "Effect": "Allow",
            "Action": "sts:TagSession",
            "Principal": {
                "Federated": "arn:aws:iam::123456789012:saml-provider/ADFS"
            },
            "Condition": {
                "StringLike": {
                    "aws:RequestTag/Department": "*",
                    "aws:RequestTag/EmployeeType": "*"
                },
                "StringEquals": {
                    "aws:RequestTag/EmployeeType": [
                        "Public",
                        "Private",
                        "Confidential"
                    ]
                }
            }
        }
    ]
}
```

- Choose Update Trust Policy to finish changing the trust policy of your IAM role.

If you have experience creating roles for a SAML IdP, the first statement in the preceding IAM role trust policy should look familiar. It allows the IdP that is set up in your AWS account to federate users into this role with the action sts:AssumeRoleWithSAML. There is an additional condition to make sure that your IdP can only federate users into this role when the Department and EmployeeType tags are set. The second statement allows the IdP to add session tags to role sessions with the action sts:TagSession. It defines two conditions. The first condition does the same as the condition specified in your first statement: it prevents federating users in if those two tags aren't applied. The second condition adds another restriction that makes sure that the tag value for EmployeeType can only be one of three values: Public, Private, or Confidential. This is especially useful for tags where you have a predefined list of tag values.
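
The two conditions on the session tags can be summarized in a short Python sketch. It only mirrors the intent described above (both tags present, EmployeeType restricted to a fixed list) and is not a reimplementation of IAM. The function name is hypothetical.

```python
ALLOWED_EMPLOYEE_TYPES = {"Public", "Private", "Confidential"}
REQUIRED_TAG_KEYS = ("Department", "EmployeeType")


def session_tags_accepted(request_tags):
    """Return True if the requested session tags satisfy both conditions."""
    # First condition: both tags must be present in the request.
    for key in REQUIRED_TAG_KEYS:
        if key not in request_tags:
            return False
    # Second condition: EmployeeType must be one of the predefined values.
    return request_tags["EmployeeType"] in ALLOWED_EMPLOYEE_TYPES
```

For example, a request that carries Department:HR and EmployeeType:Secret would be rejected, because Secret is not in the predefined list.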

Define the S3 bucket policy

As you might have noticed, the IAM policy doesn't contain any conditions for the data classification that should be applied using the LDAP attribute and tag called EmployeeType. The authorization logic for the S3 bucket using the EmployeeType tag is a bit more complicated (see the earlier statement about how authorization is based on data classification), so you use the S3 bucket policy with deny statements so that access is explicitly denied if a user doesn't have the appropriate tag applied.

To apply a bucket policy to your S3 bucket

- Sign in to the console and open the Amazon S3 console.

- From the list of S3 buckets shown in the console, select the S3 bucket that you are using for this blog post.

- On the navigation bar, select Permissions.

- Choose Edit next to Bucket Policy.

- Replace the current S3 bucket policy under Policy with the following policy:

- Choose Save Changes to apply your new bucket policy.

The S3 bucket policy you applied includes a statement that denies tagging of S3 objects with the two tags by any principal other than your ETL framework. The policy also denies access for the different data classifications based on the values of the object tag and the principal tags, which are your role session tags. The bucket policy also includes a statement that denies access to data if the object doesn't have the tag EmployeeType attached to it. Feel free to change this according to your needs. The benefit of having these explicit deny statements in the S3 bucket policy is that no IAM policy can overrule them with an allow statement. You also need these deny statements to support the data classification feature, because the policy language required for it isn't enforced in your IAM permissions policy.
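
As a rough illustration of the kind of deny statements described above, the following Python sketch assembles a bucket policy document and prints it as JSON. It is an assumption-laden example rather than the policy used in this post. The ETL role ARN is hypothetical, the statement names are invented, the classification logic is split across several statements for readability, and the result should be reviewed and tested before any real use.

```python
import json

BUCKET_ARN = "arn:aws:s3:::aws-blogpost-datalake-tbac"
# Hypothetical role name for the ETL framework; replace with your own.
ETL_ROLE_ARN = "arn:aws:iam::123456789012:role/etl-framework-role"
READ_ACTIONS = ["s3:GetObject", "s3:GetObjectVersion"]
OBJECT_TAG = "s3:ExistingObjectTag/EmployeeType"
PRINCIPAL_TAG = "aws:PrincipalTag/EmployeeType"


def deny(sid, actions, condition):
    """Build one deny statement that applies to every principal."""
    return {
        "Sid": sid,
        "Effect": "Deny",
        "Principal": "*",
        "Action": actions,
        "Resource": f"{BUCKET_ARN}/*",
        "Condition": condition,
    }


bucket_policy = {
    "Version": "2012-10-17",
    "Statement": [
        # Only the ETL role may set the two authorization tags on objects.
        deny("DenyTaggingExceptEtl",
             ["s3:PutObjectTagging", "s3:PutObjectVersionTagging"],
             {"ForAnyValue:StringEquals": {
                 "s3:RequestObjectTagKeys": ["Department", "EmployeeType"]},
              "ArnNotEquals": {"aws:PrincipalArn": ETL_ROLE_ARN}}),
        # Confidential objects require a Confidential session tag.
        deny("DenyConfidentialData", READ_ACTIONS,
             {"StringEquals": {OBJECT_TAG: "Confidential"},
              "StringNotEquals": {PRINCIPAL_TAG: "Confidential"}}),
        # Private objects require a Private or Confidential session tag.
        deny("DenyPrivateData", READ_ACTIONS,
             {"StringEquals": {OBJECT_TAG: "Private"},
              "StringNotEquals": {PRINCIPAL_TAG: ["Private", "Confidential"]}}),
        # Objects without an EmployeeType tag are not readable at all.
        deny("DenyUnclassifiedData", READ_ACTIONS,
             {"Null": {OBJECT_TAG: "true"}}),
    ],
}

if __name__ == "__main__":
    print(json.dumps(bucket_policy, indent=4))
```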

Secure your tags using an AWS Organizations service control policy

Because you are relying on tags for authorization to data, you want to limit who is able to tag data on Amazon S3 and your IAM principals. You already used an S3 bucket policy to prevent any principal other than your ETL framework from applying tags. The following service control policy (SCP) prevents the modification of the bucket policy. The SCP also prevents setting tags on AWS IAM principals, as these will be applied on a per-session basis. To achieve this, you apply an SCP to the AWS account that contains your ETL framework and the S3 bucket with your data. If your ETL framework runs in a separate AWS account, you must also apply the policy to that account. This must be done in the management account of your organization.

To create and attach an SCP

- Follow the procedure for Creating an SCP through step 4.

- Replace the current service control policy as shown under Policy with the following policy:

- Finish creating the policy by choosing Create policy.

- To attach the SCP to your AWS account, follow the procedure for Attaching an SCP.

Looking at the preceding SCP, you can see that you are denying tagging of IAM users and roles except for an IAM role called admin-role. You haven't used any IAM users in the example solution, but you still want to prevent anyone from creating a user and gaining access to your data. The last statement prevents principals from modifying the S3 bucket policy that contains the statements that protect your tags. Only the role admin-role is allowed to delete or update S3 bucket policies in the account this SCP is attached to.
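
An SCP along the lines described above might look like the following sketch, again assembled in Python and printed as JSON. This is an illustrative assumption, not the policy from this post: the statement names and the wildcard account pattern in the role ARN are invented for the example, and only the role name admin-role, the two tag keys, and the bucket name are taken from the text.

```python
import json

# Role that stays allowed to tag principals and change the bucket policy.
ADMIN_ROLE_ARN_PATTERN = "arn:aws:iam::*:role/admin-role"
BUCKET_ARN = "arn:aws:s3:::aws-blogpost-datalake-tbac"

scp = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # Deny setting or removing the authorization tags on IAM principals.
            "Sid": "DenyPrincipalTagging",
            "Effect": "Deny",
            "Action": [
                "iam:TagRole",
                "iam:TagUser",
                "iam:UntagRole",
                "iam:UntagUser",
            ],
            "Resource": "*",
            "Condition": {
                "ForAnyValue:StringEquals": {
                    "aws:TagKeys": ["Department", "EmployeeType"]
                },
                "ArnNotLike": {"aws:PrincipalArn": ADMIN_ROLE_ARN_PATTERN},
            },
        },
        {
            # Deny changes to the bucket policy that protects the object tags.
            "Sid": "DenyBucketPolicyChanges",
            "Effect": "Deny",
            "Action": ["s3:PutBucketPolicy", "s3:DeleteBucketPolicy"],
            "Resource": BUCKET_ARN,
            "Condition": {
                "ArnNotLike": {"aws:PrincipalArn": ADMIN_ROLE_ARN_PATTERN}
            },
        },
    ],
}

if __name__ == "__main__":
    print(json.dumps(scp, indent=4))
```

Unlike the bucket policy, an SCP has no Principal element, which is why the exception for the administrative role is expressed as a condition on the calling principal's ARN.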

Note: If you use your ETL framework to create your S3 buckets automatically, you must replace the role ARN in the preceding policy with the role ARN of your ETL framework role.

Query your data with Athena

Amazon Athena is an AWS managed service that allows you to query your data on Amazon S3 using standard SQL. To demonstrate that Athena also works in combination with tags, let's look at how to query your tagged data. What is important to note is that Athena can only run a query if all of the data objects from the same dataset or data table are accessible. This is why it's important that, when querying data stored on Amazon S3, the same tags are applied to all of the objects belonging to a common dataset. Before proceeding, you must have already uploaded a data set into Amazon S3 as mentioned in the prerequisites, and used AWS Glue to populate an AWS Glue Data Catalog. Athena and AWS Glue support multiple file formats, including CSV, JSON, ORC, and Parquet. To use this example, the data set you have uploaded must have a field named dept, as described in the prerequisites, to use to query the table.
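
Because a query fails if even one object of a dataset is not accessible, it can help to check that all objects of a dataset carry the same authorization tags. The following Python sketch shows one way to express that check on tags that you have already collected. The function name, the object keys, and the input format (a mapping from object key to its tags) are assumptions made for this example.

```python
def inconsistent_objects(object_tags_by_key, tag_keys=("Department", "EmployeeType")):
    """Return the object keys whose tags differ from the first object's tags."""
    reference = None
    mismatches = []
    for key in sorted(object_tags_by_key):
        relevant = {name: object_tags_by_key[key].get(name) for name in tag_keys}
        if reference is None:
            reference = relevant  # the first object sets the expectation
        elif relevant != reference:
            mismatches.append(key)
    return mismatches


# Hypothetical dataset in which one object has a different classification.
example = {
    "hr/part-0001.csv": {"Department": "HR", "EmployeeType": "Private"},
    "hr/part-0002.csv": {"Department": "HR", "EmployeeType": "Private"},
    "hr/part-0003.csv": {"Department": "HR", "EmployeeType": "Confidential"},
}

if __name__ == "__main__":
    print(inconsistent_objects(example))
```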

To query the data on Amazon S3 using Athena

- Sign in to the console using AD FS as one of the HR employees described earlier in Tags and LDAP attributes, and open the Athena console.

- Query the hr_dept table on Amazon S3 as described in Running SQL Queries Using Amazon Athena. The query should return results similar to those shown in Figure 4.

![Figure 4: Successful Amazon Athena query](https://infracom.com.sg/wp-content/uploads/2021/03/fb_image-32-scaled.jpeg)

Figure 4: Successful Amazon Athena query

If you query a table that contains objects with a tag that you don't have permissions to, the query returns an access denied message as shown in Figure 5. In this example, the failure is because the role used has the tag Department:Finance instead of Department:HR.

Figure 5: Failed Amazon Athena query

Troubleshooting and wrap-up

If you run into any issues, you can use AWS CloudTrail to troubleshoot. To verify that the tags are applied to your role sessions properly, CloudTrail can show you which tags are applied to the role session.
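
When you review CloudTrail records for this purpose, a small helper like the following Python sketch can pull the session tags out of an event. It assumes that the federation event is named AssumeRoleWithSAML and that the tags appear under `requestParameters.principalTags`. Verify both against your own CloudTrail records, because the sample event below is hand-written and heavily shortened.

```python
import json


def session_tags_from_event(cloudtrail_event):
    """Return the session tags recorded for a SAML federation event, if any."""
    if cloudtrail_event.get("eventName") != "AssumeRoleWithSAML":
        return {}
    parameters = cloudtrail_event.get("requestParameters") or {}
    return dict(parameters.get("principalTags") or {})


# Hand-written, shortened sample record used only to exercise the helper.
SAMPLE_EVENT = json.loads("""
{
    "eventSource": "sts.amazonaws.com",
    "eventName": "AssumeRoleWithSAML",
    "requestParameters": {
        "roleArn": "arn:aws:iam::123456789012:role/DataEngineering",
        "principalTags": {"Department": "HR", "EmployeeType": "Private"}
    }
}
""")

if __name__ == "__main__":
    print(session_tags_from_event(SAMPLE_EVENT))
```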

In this blog post, you've seen how to use session tags in combination with your Active Directory to scale your authorization needs beyond RBAC. You've also learned the steps you can take to protect tags and tagging permissions to prevent escalation of privileges within your AWS account. It's important to understand how tags work together and to engage your business stakeholders to understand which tags are important for authorization purposes in your environment. Tags should reflect your data access requirements, so make sure you edit the policies in this post to reflect your tags and roles.
