Apple must act against phony app-privacy promises

Apple will need to become more aggressive in how it polices the privacy claims developers make when promoting apps in the App Store. So what can enterprise customers do to protect themselves and their customers in the meantime?

What’s the issue?

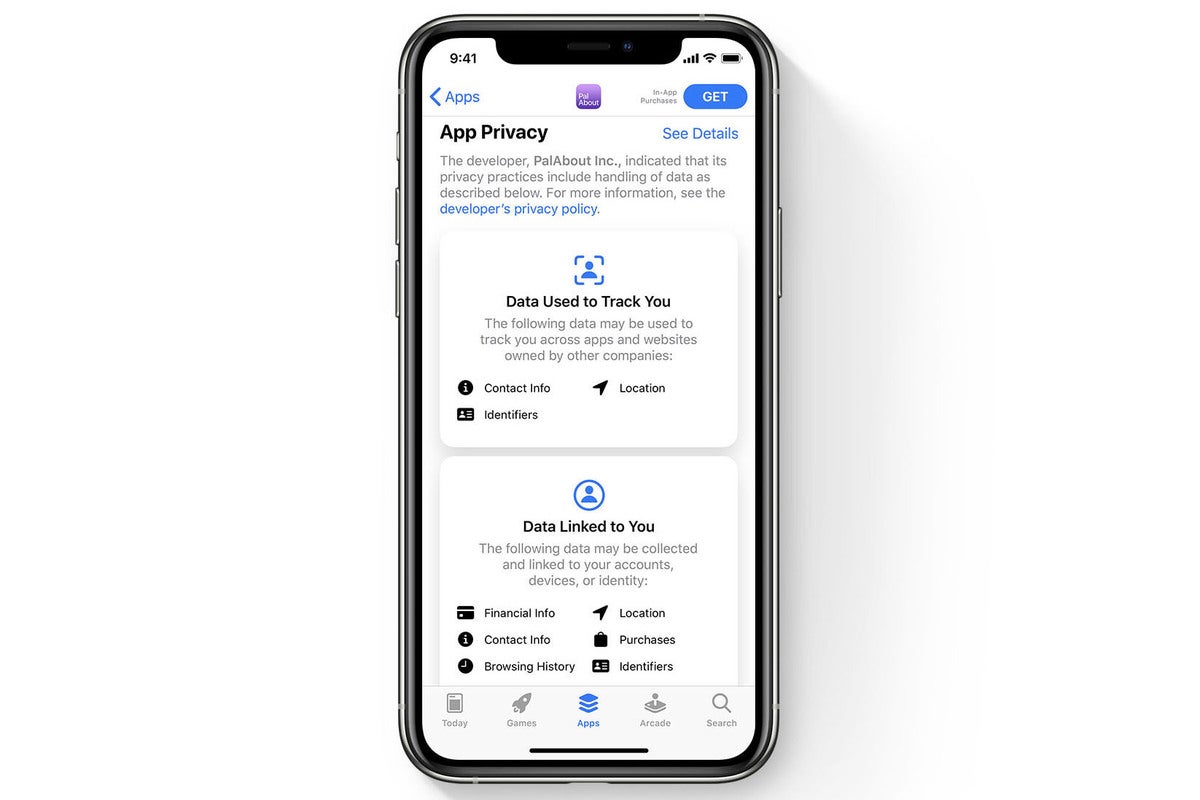

Some developers continue to abuse the spirit of Apple's App Store privacy rules. This extends to publishing misleading information on App Privacy Labels, as well as outright violation of promises not to track devices. Some even ignore do-not-track requests in order to exfiltrate device-tracking information.

The Washington Post, which recently launched its own digital ads system, has identified multiple instances in which rogue App Store apps fail to keep their promise of user privacy.

When a user says they don't want an app to track them, the app should respect that request. But the report cites numerous cases in which apps continue to harvest exactly the same information, no matter what the user requests. This data may be sold to third-party data-tracking firms or used to deliver targeted advertising, the report states. What it doesn't say is that failing to respect user wishes is a betrayal of trust.

What will help?

The Post spoke to former iCloud engineer Johnny Lin, who argues: "When it comes to stopping third-party trackers, App Tracking Transparency is a dud. Worse, giving users the option to tap an 'Ask App Not To Track' button may even give users a false sense of privacy."

That's harsh criticism, and it seems appropriate to note that Lin has an interest here. His company develops Lockdown, which blocks "tracking, ads and badware" in all apps, not just Safari. Perhaps Apple should adopt the same approach. But given the months of pushback the company faced when it introduced App Tracking Transparency, doing so at Apple's scale may take time. Surveillance capitalism has a lot of money to spend opposing such plans; as things stand, users, and enterprise users in particular, should take steps to protect themselves.

We do need some education

Another approach is education. Each time we see privacy problems emerge, we also seem to encounter claims that a number of these rogue apps come in the form of bite-sized entertainment titles aimed at casual gamers and kids.

Of course, an app actively grabbing data doesn't care whether it was the parent who installed it or the parent's child using a borrowed smartphone.

Users should learn to be really discerning about the apps they use. When it comes to child-focused pester power, I'd argue the safest approach is to use Apple Arcade and let your kids play whatever they want from there. It's not ideal, but it is one way to limit risk.

Embrace (but verify) gray IT apps

A third approach that should work is policy development. Enterprises should look closely at the apps employees use on their devices to make sure they comply with security policy.

Use of MDM systems and Managed Apple IDs for the business side should increase, while enterprises should work closely with employees to identify the apps they actually use. Many companies now struggle with gray IT: apps and services employees use to get work done easily because these tools are better than the ones the company provides. In general, prohibition doesn't work.

A better approach is to identify those apps, vet them against business security policy, and transparently explain why some can't be used. This should be coupled with work to make sure your own apps are at least as easy to use as gray-market alternatives. This switched-on approach supports personal autonomy across your teams far better than autocratic diktats. The idea is that by working with teams, you end up with a more secure space. You can supplement this with classic MDM options.
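For IT teams that want to make the "identify, vet, explain" loop concrete, here is a minimal sketch in Python. It assumes you already have a list of app bundle IDs exported from your MDM inventory; the policy sets, bundle IDs, and reasons below are hypothetical placeholders, not real apps or any MDM vendor's API. The point is that every verdict carries a human-readable reason, so blocked apps come with an explanation rather than a bare "no", and anything unknown is routed to review instead of being silently banned.

```python
from dataclasses import dataclass

# Hypothetical policy data: bundle IDs and reasons are illustrative only.
APPROVED = {"com.example.notes"}
BLOCKED = {
    "com.example.freevpn": "Contacts third-party trackers despite a 'no tracking' privacy label",
}


@dataclass
class Verdict:
    bundle_id: str
    status: str  # "approved", "blocked", or "needs review"
    reason: str  # plain-language explanation to share with employees


def vet_inventory(bundle_ids):
    """Classify each installed app against the policy, deduplicated and sorted."""
    verdicts = []
    for bundle_id in sorted(set(bundle_ids)):
        if bundle_id in BLOCKED:
            verdicts.append(Verdict(bundle_id, "blocked", BLOCKED[bundle_id]))
        elif bundle_id in APPROVED:
            verdicts.append(Verdict(bundle_id, "approved", "Meets security policy"))
        else:
            # Unknown gray-IT app: flag for vetting rather than prohibiting outright.
            verdicts.append(Verdict(bundle_id, "needs review", "Not yet vetted by security team"))
    return verdicts


if __name__ == "__main__":
    inventory = ["com.example.notes", "com.example.freevpn", "com.example.chat"]
    for v in vet_inventory(inventory):
        print(f"{v.status:12} {v.bundle_id}: {v.reason}")
```

Sending unknown apps to "needs review" mirrors the argument above: prohibition by default tends to push employees further into gray IT, whereas a review queue gives the security team a chance to approve useful tools.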

Karma police

But what will make the biggest difference is policing. Apple already says it will work with developers who fail to uphold the privacy promise, but it perhaps needs to toughen this process. I'd argue that it should proactively monitor all apps against the privacy promises they make to ensure they keep those promises.

Those that don't should be removed.

Nor is it enough to vet only the specific apps identified by external parties. If a developer is found to have abused privacy in one app, all of their apps should then be checked.

Educated consumers and security researchers can help with this, using apps such as Little Snitch, Lockdown, Jumbo, Disconnect.me, and a range of others to monitor the network activity apps generate. If an app promises privacy, it must be held to account, and one way to do that is to use tools like these to watch for privacy leaks and tell Apple when you identify an app that leaks data without your permission.
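As a rough illustration of what "monitoring for leaks" means in practice, the Python sketch below cross-checks observed connections against a list of tracker domains and flags only apps you have asked not to track you. It assumes you have already captured (app, hostname) pairs by some means; it does not read the export format of Little Snitch, Lockdown, or any other named tool, and the tracker domains, app names, and opt-out list are hypothetical. A connection to a tracker domain is a signal worth reporting, not proof of what data was sent.

```python
# Hypothetical sample data; in practice this would come from your own monitoring.
TRACKER_DOMAINS = {"tracker.example", "ads.example"}
OPTED_OUT = {"Puzzle Fun", "Notes"}  # apps you tapped "Ask App Not to Track" for
CONNECTIONS = [
    ("Puzzle Fun", "api.tracker.example"),
    ("Puzzle Fun", "cdn.puzzle.example"),
    ("Notes", "sync.notes.example"),
    ("Word Game", "ads.example"),
]


def matches_domain(host, domain):
    """True if host is the domain itself or one of its subdomains."""
    host = host.lower().rstrip(".")
    return host == domain or host.endswith("." + domain)


def find_leaks(connections, tracker_domains, opted_out_apps):
    """Map each opted-out app to the tracker hostnames it was seen contacting."""
    leaks = {}
    for app, host in connections:
        if app not in opted_out_apps:
            continue  # user never declined tracking for this app
        if any(matches_domain(host, d) for d in tracker_domains):
            leaks.setdefault(app, set()).add(host)
    return leaks


if __name__ == "__main__":
    for app, hosts in find_leaks(CONNECTIONS, TRACKER_DOMAINS, OPTED_OUT).items():
        print(f"{app}: contacted {', '.join(sorted(hosts))} after a do-not-track request")
```

Matching on whole domain labels (so `api.tracker.example` counts but `nottracker.example` does not) avoids false reports against unrelated hosts that merely share a suffix.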

This process of understanding risks, working with your internal groups (family, employees, children) to control and minimize risk, and aggressively identifying apps that fail to keep their privacy promise should help make the environment more difficult for such egregious attacks.

What can happen next

Despite Apple's efforts, what's happening now is that we're being given a false sense of security when we look at an app's privacy policy on the App Store. When an app developer promises not to steal our information, or when we ask them not to track us, we are inclined to trust them. For Apple, the next step is to vet and verify all the apps it sells to ensure they keep the privacy promises they make.

To my mind, privacy fraud is just as bad as any other kind of fraud. Apple already polices apps for fraudulent behavior and last year rejected 150,000 apps for being spam, copycats, or misleading to users.

It now needs to do the same for privacy cheats.

Please follow me on Twitter, or join me in the AppleHolic's bar & grill and Apple Discussions groups on MeWe.